mirror of

https://github.com/paperless-ngx/paperless-ngx.git

synced 2025-04-13 10:03:49 -05:00

Compare commits

308 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

f269919410 | ||

|

|

5f00066dff | ||

|

|

705f542129 | ||

|

|

f036292b72 | ||

|

|

f8a43d5dab | ||

|

|

53c106d448 | ||

|

|

0f37186c88 | ||

|

|

0fb55f3ae8 | ||

|

|

78822f6121 | ||

|

|

2ee1d7540e | ||

|

|

43b2527275 | ||

|

|

248b573c03 | ||

|

|

b9f7428f2f | ||

|

|

e35dad81d9 | ||

|

|

7d40eb2e24 | ||

|

|

d2783a3fbe | ||

|

|

8ad794e189 | ||

|

|

358db10fe3 | ||

|

|

556f47fe12 | ||

|

|

0d5a2b4382 | ||

|

|

c9bc9acd1a | ||

|

|

e9e209d290 | ||

|

|

2e593a0022 | ||

|

|

3526a4cf23 | ||

|

|

f4791cac2d | ||

|

|

fdafd4eefb | ||

|

|

87a8847a8d | ||

|

|

7c31c79bbc | ||

|

|

bf2a9b02c6 | ||

|

|

348858780c | ||

|

|

eb481ac1c0 | ||

|

|

9a2d7a64ac | ||

|

|

32a7f9cd5a | ||

|

|

92431b2f4b | ||

|

|

b4b2a92225 | ||

|

|

fd45e81a83 | ||

|

|

97d59dce9c | ||

|

|

f3479d982c | ||

|

|

b3ba673d9a | ||

|

|

68b7427640 | ||

|

|

9c68100dc0 | ||

|

|

6e694ad9ff | ||

|

|

5db511afdf | ||

|

|

a8de26f88a | ||

|

|

7a07f1e81d | ||

|

|

92524ae97a | ||

|

|

1c89f6da24 | ||

|

|

d1a3e3b859 | ||

|

|

79ae594d54 | ||

|

|

f753f6dc46 | ||

|

|

97fe5c4176 | ||

|

|

1f5086164b | ||

|

|

e60bd3a132 | ||

|

|

b4047e73bb | ||

|

|

4263d2196c | ||

|

|

ac780134fb | ||

|

|

5d6cfa7349 | ||

|

|

3105317137 | ||

|

|

1d9482acc3 | ||

|

|

1456169d7f | ||

|

|

22a6fe5e10 | ||

|

|

caa3c13edd | ||

|

|

24e863b298 | ||

|

|

0c9d615f56 | ||

|

|

4c49da9ece | ||

|

|

a0c1a19263 | ||

|

|

1f5d1b6f26 | ||

|

|

3b19a727b8 | ||

|

|

7146a5f4fc | ||

|

|

6babc61ba2 | ||

|

|

db5e54c6e5 | ||

|

|

4ef5fbfb6e | ||

|

|

b0390a92ea | ||

|

|

70898a5064 | ||

|

|

d07d425618 | ||

|

|

1f60f4a636 | ||

|

|

b29395cde7 | ||

|

|

2f70d58219 | ||

|

|

9944f81512 | ||

|

|

f4413e0a08 | ||

|

|

169aa8c8bd | ||

|

|

94556a2607 | ||

|

|

dcd50d5359 | ||

|

|

376823598e | ||

|

|

54bcbfa546 | ||

|

|

5421e54cb0 | ||

|

|

032bada221 | ||

|

|

670ee6c5b0 | ||

|

|

309fb199f2 | ||

|

|

79328b1cec | ||

|

|

5570d20625 | ||

|

|

ba2cb1dec8 | ||

|

|

4d15544a3e | ||

|

|

955ff32dcd | ||

|

|

b746b6f2d6 | ||

|

|

b4b0f802e1 | ||

|

|

5f16d5f5f1 | ||

|

|

8db04398c7 | ||

|

|

485dad01b7 | ||

|

|

cf48f47a8c | ||

|

|

f2667f5afa | ||

|

|

d73118d226 | ||

|

|

dd9e9a8c56 | ||

|

|

76d363f22d | ||

|

|

aaaa6c1393 | ||

|

|

bed82215a0 | ||

|

|

f8aaa5cb32 | ||

|

|

1e489a0666 | ||

|

|

edc7181843 | ||

|

|

89e5c08a1f | ||

|

|

0faa9e8865 | ||

|

|

f205c4d0e2 | ||

|

|

344b2bc0eb | ||

|

|

817aad7c8b | ||

|

|

d82555e644 | ||

|

|

f3e6ed56b9 | ||

|

|

780d1c67e9 | ||

|

|

2b72397a4d | ||

|

|

6c13ffaa01 | ||

|

|

eb8e124971 | ||

|

|

1bc77546eb | ||

|

|

5a453653e2 | ||

|

|

16f17829b6 | ||

|

|

3cf1c04a83 | ||

|

|

bc90ccc555 | ||

|

|

90a332a02c | ||

|

|

0098d1bdd5 | ||

|

|

f6fef18a73 | ||

|

|

6563ec6770 | ||

|

|

755cf8619f | ||

|

|

c6d389100c | ||

|

|

20c4b65273 | ||

|

|

86c94c7508 | ||

|

|

798ece411e | ||

|

|

654c9ca273 | ||

|

|

628d85080f | ||

|

|

865e9fe233 | ||

|

|

0eb765c3e8 | ||

|

|

ddeb741a85 | ||

|

|

b9bcff22f8 | ||

|

|

2d52226732 | ||

|

|

ec34197b59 | ||

|

|

edc0e6f859 | ||

|

|

61cb5103ed | ||

|

|

d364436817 | ||

|

|

827fcba277 | ||

|

|

90561857e8 | ||

|

|

3104417076 | ||

|

|

047f7c3619 | ||

|

|

a548c32c1f | ||

|

|

ea911e73c6 | ||

|

|

6b7fb286f7 | ||

|

|

b40479632b | ||

|

|

c122c60d3f | ||

|

|

4f08b5fa20 | ||

|

|

3bf64ae7da | ||

|

|

822c2d2d56 | ||

|

|

98e0a934ac | ||

|

|

ceffcd6360 | ||

|

|

37442ff829 | ||

|

|

de5f66b3a0 | ||

|

|

e37096f66f | ||

|

|

e49ecd4dfe | ||

|

|

4718df271f | ||

|

|

17bb3ebbf5 | ||

|

|

5e00c1c676 | ||

|

|

fc68f55d1a | ||

|

|

a9ef7ff58e | ||

|

|

518091f856 | ||

|

|

feb30f36df | ||

|

|

bbad36717f | ||

|

|

329ef7aef3 | ||

|

|

2b2115e5f0 | ||

|

|

ba5705a54f | ||

|

|

ea94626b82 | ||

|

|

fdfea68576 | ||

|

|

4e61c2b2e6 | ||

|

|

7c959754a0 | ||

|

|

fed16974dd | ||

|

|

ea3303aa76 | ||

|

|

1dc80f04cb | ||

|

|

c316ae369b | ||

|

|

d94b284815 | ||

|

|

63bb3644f6 | ||

|

|

6a8ec182fa | ||

|

|

880f08599a | ||

|

|

71472a6a82 | ||

|

|

7f36163c3b | ||

|

|

e560fa3be0 | ||

|

|

b274665e21 | ||

|

|

a499905605 | ||

|

|

b8bdc10f25 | ||

|

|

e08606af6e | ||

|

|

52ab07c673 | ||

|

|

046d8456e2 | ||

|

|

3314c59828 | ||

|

|

2103a499eb | ||

|

|

7ab779e78a | ||

|

|

49390c9427 | ||

|

|

6f29d64325 | ||

|

|

69541546ea | ||

|

|

065724befb | ||

|

|

e877beea4e | ||

|

|

3e848e6e0f | ||

|

|

2e5656e1ce | ||

|

|

438650bf17 | ||

|

|

7df0b621a5 | ||

|

|

4cd755f641 | ||

|

|

8597911d85 | ||

|

|

befb80bddf | ||

|

|

7af6983cab | ||

|

|

16d6bb7334 | ||

|

|

49b658a944 | ||

|

|

e1d8680698 | ||

|

|

ee72e2d1fd | ||

|

|

e0ea4a4625 | ||

|

|

c2a9ac332a | ||

|

|

bf368aadd0 | ||

|

|

54e72d5b60 | ||

|

|

cf7422346a | ||

|

|

5b8c9ef5fc | ||

|

|

f56974f158 | ||

|

|

427508edf1 | ||

|

|

311b259cff | ||

|

|

fce7b03324 | ||

|

|

79956d6a7b | ||

|

|

978b072bff | ||

|

|

9c6f695dbf | ||

|

|

0100fcbb23 | ||

|

|

1745da0d60 | ||

|

|

e4e906ce2b | ||

|

|

80c7b97fec | ||

|

|

270e70a958 | ||

|

|

082bf6fb8e | ||

|

|

38296d9426 | ||

|

|

8311313e6e | ||

|

|

ac292999ef | ||

|

|

f3cda54cd1 | ||

|

|

8f9a294529 | ||

|

|

702de0cac3 | ||

|

|

ca42762841 | ||

|

|

13cfd6f904 | ||

|

|

18c4e6029f | ||

|

|

6c34e37838 | ||

|

|

2c28348b56 | ||

|

|

79e541244e | ||

|

|

74afad5976 | ||

|

|

c694c9791b | ||

|

|

11ceb8bde5 | ||

|

|

20ec8cb57b | ||

|

|

bfc11a545b | ||

|

|

4866af31cb | ||

|

|

0ea4da03a7 | ||

|

|

41bcc12cc2 | ||

|

|

475c231c6f | ||

|

|

e00dd46b22 | ||

|

|

fd425aa618 | ||

|

|

e1dde85c59 | ||

|

|

01207a284d | ||

|

|

0f863ab378 | ||

|

|

258064b339 | ||

|

|

2bcb37f3e9 | ||

|

|

81f8c64b2c | ||

|

|

3b80112521 | ||

|

|

459feea31e | ||

|

|

eea5839390 | ||

|

|

c79414ebd9 | ||

|

|

ed1775e689 | ||

|

|

cd50f20a20 | ||

|

|

c8ec70c05f | ||

|

|

5e3ee3a80d | ||

|

|

29726c3ce1 | ||

|

|

6804c92861 | ||

|

|

a32077566b | ||

|

|

283bcb4c91 | ||

|

|

f68ee628d9 | ||

|

|

bd5ba97ee8 | ||

|

|

325594034e | ||

|

|

28261ac51c | ||

|

|

23ef52f405 | ||

|

|

2b45793bc2 | ||

|

|

2f96cc0050 | ||

|

|

d36e8254f3 | ||

|

|

405fab8514 | ||

|

|

ee4f62a1b3 | ||

|

|

d97e4a9a95 | ||

|

|

1cfba87114 | ||

|

|

b145ed315a | ||

|

|

1f47b8c090 | ||

|

|

1e3f2a1438 | ||

|

|

d61b2bbfc6 | ||

|

|

e1d6b4a9ac | ||

|

|

9f398337c6 | ||

|

|

765bf1d11c | ||

|

|

49a96ccee0 | ||

|

|

c45bbcaea2 | ||

|

|

ab87aedfc7 | ||

|

|

18e3ad8b22 | ||

|

|

c342eafa8d | ||

|

|

290c44f5d5 | ||

|

|

02015ec404 | ||

|

|

98b4f447d8 | ||

|

|

272691e386 | ||

|

|

1012cee39a | ||

|

|

19a5733d0d | ||

|

|

86788f1445 | ||

|

|

1d5e7e930e | ||

|

|

da6d568906 |

.codecov.yml

.devcontainer

.dockerignore.editorconfig.github

.gitignore.pre-commit-config.yaml.python-version.ruff.tomlCODEOWNERSCONTRIBUTING.mdDockerfilePipfilePipfile.lockREADME.mddocker

compose

docker-compose.ci-test.ymldocker-compose.mariadb-tika.ymldocker-compose.mariadb.ymldocker-compose.portainer.ymldocker-compose.postgres-tika.ymldocker-compose.postgres.ymldocker-compose.sqlite-tika.ymldocker-compose.sqlite.yml

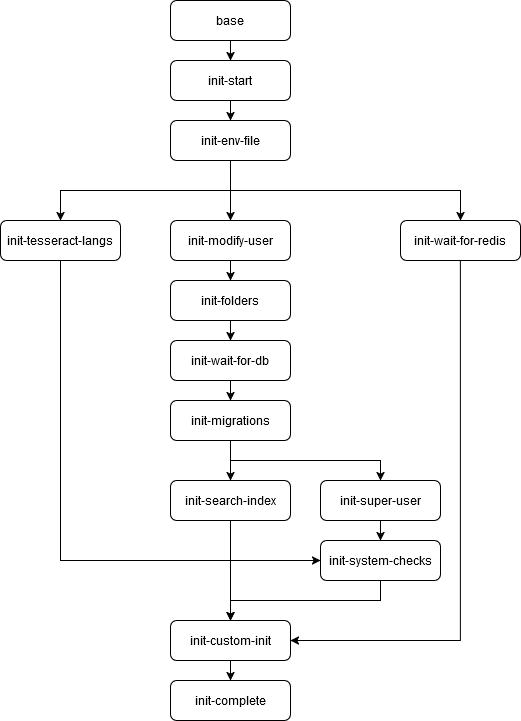

docker-prepare.shenv-from-file.shflower-conditional.shinit-flow.drawio.pnginstall_management_commands.shmanagement_script.shpaperless_cmd.shrootfs/etc

ImageMagick-6

s6-overlay/s6-rc.d

init-complete

dependencies.d

init-custom-initinit-env-fileinit-foldersinit-migrationsinit-modify-userinit-search-indexinit-startinit-superuserinit-system-checksinit-tesseract-langsinit-wait-for-dbinit-wait-for-redis

runtypeupinit-custom-init

init-env-file

init-folders

init-migrations

init-modify-user

init-search-index

init-start

init-superuser

init-system-checks

init-tesseract-langs

16

.codecov.yml

@ -1,18 +1,18 @@

|

|||||||

codecov:

|

codecov:

|

||||||

require_ci_to_pass: true

|

require_ci_to_pass: true

|

||||||

# https://docs.codecov.com/docs/flags#recommended-automatic-flag-management

|

# https://docs.codecov.com/docs/components

|

||||||

# Require each flag to have 1 upload before notification

|

component_management:

|

||||||

flag_management:

|

individual_components:

|

||||||

individual_flags:

|

- component_id: backend

|

||||||

- name: backend

|

|

||||||

paths:

|

paths:

|

||||||

- src/

|

- src/**

|

||||||

- name: frontend

|

- component_id: frontend

|

||||||

paths:

|

paths:

|

||||||

- src-ui/

|

- src-ui/**

|

||||||

# https://docs.codecov.com/docs/pull-request-comments

|

# https://docs.codecov.com/docs/pull-request-comments

|

||||||

# codecov will only comment if coverage changes

|

# codecov will only comment if coverage changes

|

||||||

comment:

|

comment:

|

||||||

|

layout: "header, diff, components, flags, files"

|

||||||

require_changes: true

|

require_changes: true

|

||||||

# https://docs.codecov.com/docs/javascript-bundle-analysis

|

# https://docs.codecov.com/docs/javascript-bundle-analysis

|

||||||

require_bundle_changes: true

|

require_bundle_changes: true

|

||||||

|

|||||||
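The `.codecov.yml` hunk above replaces `flag_management.individual_flags` with `component_management.individual_components`. As a rough illustration of that mapping, the sketch below applies the same rewrite to an already-parsed configuration held in plain dictionaries. The helper name `flags_to_components` is hypothetical, and the `/**` suffix simply mirrors what the diff does; it is not a Codecov API.

```python
from copy import deepcopy


def flags_to_components(config: dict) -> dict:
    """Return a copy of a parsed .codecov.yml with flags rewritten as components.

    Hypothetical helper for illustration only: it mirrors the edit in the diff
    (name -> component_id, "dir/" -> "dir/**") and nothing more.
    """
    result = deepcopy(config)
    flag_section = result.pop("flag_management", None)
    if flag_section is None:
        return result

    components = []
    for flag in flag_section.get("individual_flags", []):
        paths = [
            # A trailing slash becomes an explicit recursive glob.
            path + "**" if path.endswith("/") else path
            for path in flag.get("paths", [])
        ]
        components.append({"component_id": flag["name"], "paths": paths})

    result["component_management"] = {"individual_components": components}
    return result


old_config = {
    "codecov": {"require_ci_to_pass": True},
    "flag_management": {
        "individual_flags": [
            {"name": "backend", "paths": ["src/"]},
            {"name": "frontend", "paths": ["src-ui/"]},
        ]
    },
}

new_config = flags_to_components(old_config)
print(new_config["component_management"])
```

The input dictionary is left untouched, so the old and new layouts can be compared side by side.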

@ -76,18 +76,15 @@ RUN set -eux \

|

|||||||

&& apt-get update \

|

&& apt-get update \

|

||||||

&& apt-get install --yes --quiet --no-install-recommends ${RUNTIME_PACKAGES}

|

&& apt-get install --yes --quiet --no-install-recommends ${RUNTIME_PACKAGES}

|

||||||

|

|

||||||

ARG PYTHON_PACKAGES="\

|

ARG PYTHON_PACKAGES="ca-certificates"

|

||||||

python3 \

|

|

||||||

python3-pip \

|

|

||||||

python3-wheel \

|

|

||||||

pipenv \

|

|

||||||

ca-certificates"

|

|

||||||

|

|

||||||

RUN set -eux \

|

RUN set -eux \

|

||||||

echo "Installing python packages" \

|

echo "Installing python packages" \

|

||||||

&& apt-get update \

|

&& apt-get update \

|

||||||

&& apt-get install --yes --quiet ${PYTHON_PACKAGES}

|

&& apt-get install --yes --quiet ${PYTHON_PACKAGES}

|

||||||

|

|

||||||

|

COPY --from=ghcr.io/astral-sh/uv:0.6 /uv /bin/uv

|

||||||

|

|

||||||

RUN set -eux \

|

RUN set -eux \

|

||||||

&& echo "Installing pre-built updates" \

|

&& echo "Installing pre-built updates" \

|

||||||

&& echo "Installing qpdf ${QPDF_VERSION}" \

|

&& echo "Installing qpdf ${QPDF_VERSION}" \

|

||||||

@ -123,13 +120,15 @@ RUN set -eux \

|

|||||||

WORKDIR /usr/src/paperless/src/docker/

|

WORKDIR /usr/src/paperless/src/docker/

|

||||||

|

|

||||||

COPY [ \

|

COPY [ \

|

||||||

"docker/imagemagick-policy.xml", \

|

"docker/rootfs/etc/ImageMagick-6/paperless-policy.xml", \

|

||||||

"./" \

|

"./" \

|

||||||

]

|

]

|

||||||

|

|

||||||

RUN set -eux \

|

RUN set -eux \

|

||||||

&& echo "Configuring ImageMagick" \

|

&& echo "Configuring ImageMagick" \

|

||||||

&& mv imagemagick-policy.xml /etc/ImageMagick-6/policy.xml

|

&& mv paperless-policy.xml /etc/ImageMagick-6/policy.xml

|

||||||

|

|

||||||

|

COPY --from=ghcr.io/astral-sh/uv:0.6 /uv /bin/uv

|

||||||

|

|

||||||

# Packages needed only for building a few quick Python

|

# Packages needed only for building a few quick Python

|

||||||

# dependencies

|

# dependencies

|

||||||

@ -140,18 +139,17 @@ ARG BUILD_PACKAGES="\

|

|||||||

libpq-dev \

|

libpq-dev \

|

||||||

# https://github.com/PyMySQL/mysqlclient#linux

|

# https://github.com/PyMySQL/mysqlclient#linux

|

||||||

default-libmysqlclient-dev \

|

default-libmysqlclient-dev \

|

||||||

pkg-config \

|

pkg-config"

|

||||||

pre-commit"

|

|

||||||

|

|

||||||

# hadolint ignore=DL3042

|

# hadolint ignore=DL3042

|

||||||

RUN --mount=type=cache,target=/root/.cache/pip/,id=pip-cache \

|

RUN --mount=type=cache,target=/root/.cache/uv,id=pip-cache \

|

||||||

set -eux \

|

set -eux \

|

||||||

&& echo "Installing build system packages" \

|

&& echo "Installing build system packages" \

|

||||||

&& apt-get update \

|

&& apt-get update \

|

||||||

&& apt-get install --yes --quiet ${BUILD_PACKAGES}

|

&& apt-get install --yes --quiet ${BUILD_PACKAGES}

|

||||||

|

|

||||||

RUN set -eux \

|

RUN set -eux \

|

||||||

&& npm update npm -g

|

&& npm update -g pnpm

|

||||||

|

|

||||||

# add users, setup scripts

|

# add users, setup scripts

|

||||||

# Mount the compiled frontend to expected location

|

# Mount the compiled frontend to expected location

|

||||||

@ -169,9 +167,6 @@ RUN set -eux \

|

|||||||

&& mkdir --parents --verbose /usr/src/paperless/paperless-ngx/.venv \

|

&& mkdir --parents --verbose /usr/src/paperless/paperless-ngx/.venv \

|

||||||

&& echo "Adjusting all permissions" \

|

&& echo "Adjusting all permissions" \

|

||||||

&& chown --from root:root --changes --recursive paperless:paperless /usr/src/paperless

|

&& chown --from root:root --changes --recursive paperless:paperless /usr/src/paperless

|

||||||

# && echo "Collecting static files" \

|

|

||||||

# && gosu paperless python3 manage.py collectstatic --clear --no-input --link \

|

|

||||||

# && gosu paperless python3 manage.py compilemessages

|

|

||||||

|

|

||||||

VOLUME ["/usr/src/paperless/paperless-ngx/data", \

|

VOLUME ["/usr/src/paperless/paperless-ngx/data", \

|

||||||

"/usr/src/paperless/paperless-ngx/media", \

|

"/usr/src/paperless/paperless-ngx/media", \

|

||||||

|

|||||||

117

.devcontainer/README.md

Normal file

@ -0,0 +1,117 @@

|

|||||||

|

# Paperless-ngx Development Environment

|

||||||

|

|

||||||

|

## Overview

|

||||||

|

|

||||||

|

Welcome to the Paperless-ngx development environment! This setup uses VSCode DevContainers to provide a consistent and seamless development experience.

|

||||||

|

|

||||||

|

### What are DevContainers?

|

||||||

|

|

||||||

|

DevContainers are a feature in VSCode that allows you to develop within a Docker container. This ensures that your development environment is consistent across different machines and setups. By defining a containerized environment, you can eliminate the "works on my machine" problem.

|

||||||

|

|

||||||

|

### Advantages of DevContainers

|

||||||

|

|

||||||

|

- **Consistency**: Same environment for all developers.

|

||||||

|

- **Isolation**: Separate development environment from your local machine.

|

||||||

|

- **Reproducibility**: Easily recreate the environment on any machine.

|

||||||

|

- **Pre-configured Tools**: Include all necessary tools and dependencies in the container.

|

||||||

|

|

||||||

|

## DevContainer Setup

|

||||||

|

|

||||||

|

The DevContainer configuration sets up all the necessary services for Paperless-ngx, including:

|

||||||

|

|

||||||

|

- Redis

|

||||||

|

- Gotenberg

|

||||||

|

- Tika

|

||||||

|

|

||||||

|

Data is stored using Docker volumes to ensure persistence across container restarts.

|

||||||

|

|

||||||

|

## Configuration Files

|

||||||

|

|

||||||

|

The setup includes debugging configurations (`launch.json`) and tasks (`tasks.json`) to help you manage and debug various parts of the project:

|

||||||

|

|

||||||

|

- **Backend Debugging:**

|

||||||

|

- `manage.py runserver`

|

||||||

|

- `manage.py document-consumer`

|

||||||

|

- `celery`

|

||||||

|

- **Maintenance Tasks:**

|

||||||

|

- Create superuser

|

||||||

|

- Run migrations

|

||||||

|

- Recreate virtual environment (`.venv` with `uv`)

|

||||||

|

- Compile frontend assets

|

||||||

|

|

||||||

|

## Getting Started

|

||||||

|

|

||||||

|

### Step 1: Running the DevContainer

|

||||||

|

|

||||||

|

To start the DevContainer:

|

||||||

|

|

||||||

|

1. Open VSCode.

|

||||||

|

2. Open the project folder.

|

||||||

|

3. Open the command palette:

|

||||||

|

- **Windows/Linux**: `Ctrl+Shift+P`

|

||||||

|

- **Mac**: `Cmd+Shift+P`

|

||||||

|

4. Type and select `Dev Containers: Rebuild and Reopen in Container`.

|

||||||

|

|

||||||

|

VSCode will build and start the DevContainer environment.

|

||||||

|

|

||||||

|

### Step 2: Initial Setup

|

||||||

|

|

||||||

|

Once the DevContainer is up and running, perform the following steps:

|

||||||

|

|

||||||

|

1. **Compile Frontend Assets**:

|

||||||

|

|

||||||

|

- Open the command palette:

|

||||||

|

- **Windows/Linux**: `Ctrl+Shift+P`

|

||||||

|

- **Mac**: `Cmd+Shift+P`

|

||||||

|

- Select `Tasks: Run Task`.

|

||||||

|

- Choose `Frontend Compile`.

|

||||||

|

|

||||||

|

2. **Run Database Migrations**:

|

||||||

|

|

||||||

|

- Open the command palette:

|

||||||

|

- **Windows/Linux**: `Ctrl+Shift+P`

|

||||||

|

- **Mac**: `Cmd+Shift+P`

|

||||||

|

- Select `Tasks: Run Task`.

|

||||||

|

- Choose `Migrate Database`.

|

||||||

|

|

||||||

|

3. **Create Superuser**:

|

||||||

|

- Open the command palette:

|

||||||

|

- **Windows/Linux**: `Ctrl+Shift+P`

|

||||||

|

- **Mac**: `Cmd+Shift+P`

|

||||||

|

- Select `Tasks: Run Task`.

|

||||||

|

- Choose `Create Superuser`.

|

||||||

|

|

||||||

|

### Debugging and Running Services

|

||||||

|

|

||||||

|

You can start and debug backend services either as debugging sessions via `launch.json` or as tasks.

|

||||||

|

|

||||||

|

#### Using `launch.json`

|

||||||

|

|

||||||

|

1. Press `F5` or go to the **Run and Debug** view in VSCode.

|

||||||

|

2. Select the desired configuration:

|

||||||

|

- `Runserver`

|

||||||

|

- `Document Consumer`

|

||||||

|

- `Celery`

|

||||||

|

|

||||||

|

#### Using Tasks

|

||||||

|

|

||||||

|

1. Open the command palette:

|

||||||

|

- **Windows/Linux**: `Ctrl+Shift+P`

|

||||||

|

- **Mac**: `Cmd+Shift+P`

|

||||||

|

2. Select `Tasks: Run Task`.

|

||||||

|

3. Choose the desired task:

|

||||||

|

- `Runserver`

|

||||||

|

- `Document Consumer`

|

||||||

|

- `Celery`

|

||||||

|

|

||||||

|

### Additional Maintenance Tasks

|

||||||

|

|

||||||

|

Additional tasks are available for common maintenance operations:

|

||||||

|

|

||||||

|

- **Recreate .venv**: For setting up the virtual environment using `uv`.

|

||||||

|

- **Migrate Database**: To apply database migrations.

|

||||||

|

- **Create Superuser**: To create an admin user for the application.

|

||||||

|

|

||||||

|

## Let's Get Started!

|

||||||

|

|

||||||

|

Follow the steps above to get your development environment up and running. Happy coding!

|

||||||
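The three "Initial Setup" steps in the README above are run through VSCode tasks. For readers who prefer a terminal, the following sketch lists (and optionally runs) what appear to be the equivalent commands, taken from the `tasks.json` changes in this same comparison. The working directories (`src-ui` and `src`) and the helper name `run_setup` are assumptions made for this sketch, not something the README states.

```python
import subprocess
from pathlib import Path

# (description, command, working directory relative to the repository root).
# The commands are the ones the tasks.json diff uses; the working directories
# are assumptions for this sketch.
SETUP_STEPS = [
    (
        "Compile frontend",
        "pnpm install && ./node_modules/.bin/ng build --configuration production",
        "src-ui",
    ),
    ("Migrate database", "uv run python manage.py migrate", "src"),
    ("Create superuser", "uv run python manage.py createsuperuser", "src"),
]


def run_setup(repo_root: Path, dry_run: bool = True) -> list[str]:
    """Run (or, by default, only list) the initial setup steps in order."""
    executed = []
    for description, command, subdir in SETUP_STEPS:
        executed.append(f"[{description}] (cd {subdir} && {command})")
        if not dry_run:
            # shell=True because the frontend step chains two commands with &&.
            subprocess.run(command, shell=True, cwd=repo_root / subdir, check=True)
    return executed


if __name__ == "__main__":
    for line in run_setup(Path("."), dry_run=True):
        print(line)
```

With the default `dry_run=True` nothing is executed, so the script is safe to run outside the container; pass `dry_run=False` only inside the DevContainer where `uv` and `pnpm` are available.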

@ -3,7 +3,7 @@

|

|||||||

"dockerComposeFile": "docker-compose.devcontainer.sqlite-tika.yml",

|

"dockerComposeFile": "docker-compose.devcontainer.sqlite-tika.yml",

|

||||||

"service": "paperless-development",

|

"service": "paperless-development",

|

||||||

"workspaceFolder": "/usr/src/paperless/paperless-ngx",

|

"workspaceFolder": "/usr/src/paperless/paperless-ngx",

|

||||||

"postCreateCommand": "pipenv install --dev && pipenv run pre-commit install",

|

"postCreateCommand": "/bin/bash -c 'uv sync --group dev && uv run pre-commit install'",

|

||||||

"customizations": {

|

"customizations": {

|

||||||

"vscode": {

|

"vscode": {

|

||||||

"extensions": [

|

"extensions": [

|

||||||

|

|||||||

@ -43,7 +43,7 @@ services:

|

|||||||

volumes:

|

volumes:

|

||||||

- ..:/usr/src/paperless/paperless-ngx:delegated

|

- ..:/usr/src/paperless/paperless-ngx:delegated

|

||||||

- ../.devcontainer/vscode:/usr/src/paperless/paperless-ngx/.vscode:delegated # VSCode config files

|

- ../.devcontainer/vscode:/usr/src/paperless/paperless-ngx/.vscode:delegated # VSCode config files

|

||||||

- pipenv:/usr/src/paperless/paperless-ngx/.venv

|

- virtualenv:/usr/src/paperless/paperless-ngx/.venv # Virtual environment persisted in volume

|

||||||

- /usr/src/paperless/paperless-ngx/src/documents/static/frontend # Static frontend files exist only in container

|

- /usr/src/paperless/paperless-ngx/src/documents/static/frontend # Static frontend files exist only in container

|

||||||

- /usr/src/paperless/paperless-ngx/src/.pytest_cache

|

- /usr/src/paperless/paperless-ngx/src/.pytest_cache

|

||||||

- /usr/src/paperless/paperless-ngx/.ruff_cache

|

- /usr/src/paperless/paperless-ngx/.ruff_cache

|

||||||

@ -65,7 +65,7 @@ services:

|

|||||||

command: /bin/sh -c "chown -R paperless:paperless /usr/src/paperless/paperless-ngx/src/documents/static/frontend && chown -R paperless:paperless /usr/src/paperless/paperless-ngx/.ruff_cache && while sleep 1000; do :; done"

|

command: /bin/sh -c "chown -R paperless:paperless /usr/src/paperless/paperless-ngx/src/documents/static/frontend && chown -R paperless:paperless /usr/src/paperless/paperless-ngx/.ruff_cache && while sleep 1000; do :; done"

|

||||||

|

|

||||||

gotenberg:

|

gotenberg:

|

||||||

image: docker.io/gotenberg/gotenberg:7.10

|

image: docker.io/gotenberg/gotenberg:8.17

|

||||||

restart: unless-stopped

|

restart: unless-stopped

|

||||||

|

|

||||||

# The Gotenberg Chromium route is used to convert .eml files. We do not

|

# The Gotenberg Chromium route is used to convert .eml files. We do not

|

||||||

@ -80,4 +80,7 @@ services:

|

|||||||

restart: unless-stopped

|

restart: unless-stopped

|

||||||

|

|

||||||

volumes:

|

volumes:

|

||||||

pipenv:

|

data:

|

||||||

|

media:

|

||||||

|

redisdata:

|

||||||

|

virtualenv:

|

||||||

|

|||||||

@ -5,7 +5,7 @@

|

|||||||

"label": "Start: Celery Worker",

|

"label": "Start: Celery Worker",

|

||||||

"description": "Start the Celery Worker which processes background and consume tasks",

|

"description": "Start the Celery Worker which processes background and consume tasks",

|

||||||

"type": "shell",

|

"type": "shell",

|

||||||

"command": "pipenv run celery --app paperless worker -l DEBUG",

|

"command": "uv run celery --app paperless worker -l DEBUG",

|

||||||

"isBackground": true,

|

"isBackground": true,

|

||||||

"options": {

|

"options": {

|

||||||

"cwd": "${workspaceFolder}/src"

|

"cwd": "${workspaceFolder}/src"

|

||||||

@ -33,7 +33,7 @@

|

|||||||

"label": "Start: Frontend Angular",

|

"label": "Start: Frontend Angular",

|

||||||

"description": "Start the Frontend Angular Dev Server",

|

"description": "Start the Frontend Angular Dev Server",

|

||||||

"type": "shell",

|

"type": "shell",

|

||||||

"command": "npm start",

|

"command": "pnpm start",

|

||||||

"isBackground": true,

|

"isBackground": true,

|

||||||

"options": {

|

"options": {

|

||||||

"cwd": "${workspaceFolder}/src-ui"

|

"cwd": "${workspaceFolder}/src-ui"

|

||||||

@ -61,7 +61,7 @@

|

|||||||

"label": "Start: Consumer Service (manage.py document_consumer)",

|

"label": "Start: Consumer Service (manage.py document_consumer)",

|

||||||

"description": "Start the Consumer Service which processes files from a directory",

|

"description": "Start the Consumer Service which processes files from a directory",

|

||||||

"type": "shell",

|

"type": "shell",

|

||||||

"command": "pipenv run python manage.py document_consumer",

|

"command": "uv run python manage.py document_consumer",

|

||||||

"group": "build",

|

"group": "build",

|

||||||

"presentation": {

|

"presentation": {

|

||||||

"echo": true,

|

"echo": true,

|

||||||

@ -80,7 +80,7 @@

|

|||||||

"label": "Start: Backend Server (manage.py runserver)",

|

"label": "Start: Backend Server (manage.py runserver)",

|

||||||

"description": "Start the Backend Server which serves the Django API and the compiled Angular frontend",

|

"description": "Start the Backend Server which serves the Django API and the compiled Angular frontend",

|

||||||

"type": "shell",

|

"type": "shell",

|

||||||

"command": "pipenv run python manage.py runserver",

|

"command": "uv run python manage.py runserver",

|

||||||

"group": "build",

|

"group": "build",

|

||||||

"presentation": {

|

"presentation": {

|

||||||

"echo": true,

|

"echo": true,

|

||||||

@ -99,7 +99,7 @@

|

|||||||

"label": "Maintenance: manage.py migrate",

|

"label": "Maintenance: manage.py migrate",

|

||||||

"description": "Apply database migrations",

|

"description": "Apply database migrations",

|

||||||

"type": "shell",

|

"type": "shell",

|

||||||

"command": "pipenv run python manage.py migrate",

|

"command": "uv run python manage.py migrate",

|

||||||

"group": "none",

|

"group": "none",

|

||||||

"presentation": {

|

"presentation": {

|

||||||

"echo": true,

|

"echo": true,

|

||||||

@ -118,7 +118,7 @@

|

|||||||

"label": "Maintenance: Build Documentation",

|

"label": "Maintenance: Build Documentation",

|

||||||

"description": "Build the documentation with MkDocs",

|

"description": "Build the documentation with MkDocs",

|

||||||

"type": "shell",

|

"type": "shell",

|

||||||

"command": "pipenv run mkdocs build --config-file mkdocs.yml && pipenv run mkdocs serve",

|

"command": "uv run mkdocs build --config-file mkdocs.yml && uv run mkdocs serve",

|

||||||

"group": "none",

|

"group": "none",

|

||||||

"presentation": {

|

"presentation": {

|

||||||

"echo": true,

|

"echo": true,

|

||||||

@ -137,7 +137,7 @@

|

|||||||

"label": "Maintenance: manage.py createsuperuser",

|

"label": "Maintenance: manage.py createsuperuser",

|

||||||

"description": "Create a superuser",

|

"description": "Create a superuser",

|

||||||

"type": "shell",

|

"type": "shell",

|

||||||

"command": "pipenv run python manage.py createsuperuser",

|

"command": "uv run python manage.py createsuperuser",

|

||||||

"group": "none",

|

"group": "none",

|

||||||

"presentation": {

|

"presentation": {

|

||||||

"echo": true,

|

"echo": true,

|

||||||

@ -156,7 +156,7 @@

|

|||||||

"label": "Maintenance: recreate .venv",

|

"label": "Maintenance: recreate .venv",

|

||||||

"description": "Recreate the python virtual environment and install python dependencies",

|

"description": "Recreate the python virtual environment and install python dependencies",

|

||||||

"type": "shell",

|

"type": "shell",

|

||||||

"command": "rm -R -v .venv/* || pipenv install --dev",

|

"command": "rm -R -v .venv/* || uv install --dev",

|

||||||

"group": "none",

|

"group": "none",

|

||||||

"presentation": {

|

"presentation": {

|

||||||

"echo": true,

|

"echo": true,

|

||||||

@ -173,8 +173,8 @@

|

|||||||

},

|

},

|

||||||

{

|

{

|

||||||

"label": "Maintenance: Install Frontend Dependencies",

|

"label": "Maintenance: Install Frontend Dependencies",

|

||||||

"description": "Install frontend (npm) dependencies",

|

"description": "Install frontend (pnpm) dependencies",

|

||||||

"type": "npm",

|

"type": "pnpm",

|

||||||

"script": "install",

|

"script": "install",

|

||||||

"path": "src-ui",

|

"path": "src-ui",

|

||||||

"group": "clean",

|

"group": "clean",

|

||||||

@ -185,7 +185,7 @@

|

|||||||

"description": "Clean install frontend dependencies and build the frontend for production",

|

"description": "Clean install frontend dependencies and build the frontend for production",

|

||||||

"label": "Maintenance: Compile frontend for production",

|

"label": "Maintenance: Compile frontend for production",

|

||||||

"type": "shell",

|

"type": "shell",

|

||||||

"command": "npm ci && ./node_modules/.bin/ng build --configuration production",

|

"command": "pnpm install && ./node_modules/.bin/ng build --configuration production",

|

||||||

"group": "none",

|

"group": "none",

|

||||||

"presentation": {

|

"presentation": {

|

||||||

"echo": true,

|

"echo": true,

|

||||||

|

|||||||
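The task changes above follow a small set of mechanical substitutions (`pipenv run` to `uv run`, `npm` to `pnpm`). A minimal sketch of those rewrite rules is shown below; the function name `migrate_command` is hypothetical. One assumption deserves a loud label: the rule for `pipenv install --dev` follows the `postCreateCommand` change (`uv sync --group dev`) rather than the `uv install --dev` string in the "recreate .venv" hunk, because uv does not appear to document an `install` subcommand.

```python
import re

# Rewrite rules derived from the task changes in the diff.
# ASSUMPTION: "pipenv install --dev" maps to "uv sync --group dev", as in the
# devcontainer postCreateCommand, not to the "uv install --dev" string that
# the "recreate .venv" task hunk shows.
REWRITES = [
    (re.compile(r"\bpipenv install --dev\b"), "uv sync --group dev"),
    (re.compile(r"\bpipenv run\b"), "uv run"),
    (re.compile(r"\bnpm ci\b"), "pnpm install"),
    (re.compile(r"\bnpm start\b"), "pnpm start"),
]


def migrate_command(command: str) -> str:
    """Apply every rewrite rule to a shell command string."""
    for pattern, replacement in REWRITES:
        command = pattern.sub(replacement, command)
    return command


if __name__ == "__main__":
    for old in (
        "pipenv run celery --app paperless worker -l DEBUG",
        "npm ci && ./node_modules/.bin/ng build --configuration production",
    ):
        print(f"{old}\n  -> {migrate_command(old)}")
```

Commands that contain none of the old tool names pass through unchanged, so the function can be applied to every task entry without special-casing.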

@ -26,3 +26,5 @@

|

|||||||

./dist

|

./dist

|

||||||

./scripts

|

./scripts

|

||||||

./resources

|

./resources

|

||||||

|

# Other stuff

|

||||||

|

**/*.drawio.png

|

||||||

|

|||||||

@ -27,9 +27,6 @@ indent_style = space

|

|||||||

[*.md]

|

[*.md]

|

||||||

indent_style = space

|

indent_style = space

|

||||||

|

|

||||||

[Pipfile.lock]

|

|

||||||

indent_style = space

|

|

||||||

|

|

||||||

# Tests don't get a line width restriction. It's still a good idea to follow

|

# Tests don't get a line width restriction. It's still a good idea to follow

|

||||||

# the 79 character rule, but in the interests of clarity, tests often need to

|

# the 79 character rule, but in the interests of clarity, tests often need to

|

||||||

# violate it.

|

# violate it.

|

||||||

|

|||||||

1

.github/FUNDING.yml

vendored

Normal file

@ -0,0 +1 @@

|

|||||||

|

github: [shamoon, stumpylog]

|

||||||

4

.github/ISSUE_TEMPLATE/config.yml

vendored

@ -2,10 +2,10 @@ blank_issues_enabled: false

|

|||||||

contact_links:

|

contact_links:

|

||||||

- name: 🤔 Questions and Help

|

- name: 🤔 Questions and Help

|

||||||

url: https://github.com/paperless-ngx/paperless-ngx/discussions

|

url: https://github.com/paperless-ngx/paperless-ngx/discussions

|

||||||

about: This issue tracker is not for support questions. Please refer to our Discussions.

|

about: General questions or support for using Paperless-ngx.

|

||||||

- name: 💬 Chat

|

- name: 💬 Chat

|

||||||

url: https://matrix.to/#/#paperlessngx:matrix.org

|

url: https://matrix.to/#/#paperlessngx:matrix.org

|

||||||

about: Want to discuss Paperless-ngx with others? Check out our chat.

|

about: Want to discuss Paperless-ngx with others? Check out our chat.

|

||||||

- name: 🚀 Feature Request

|

- name: 🚀 Feature Request

|

||||||

url: https://github.com/paperless-ngx/paperless-ngx/discussions/new?category=feature-requests

|

url: https://github.com/paperless-ngx/paperless-ngx/discussions/new?category=feature-requests

|

||||||

about: Remember to search for existing feature requests and "up-vote" any you like

|

about: Remember to search for existing feature requests and "up-vote" those that you like.

|

||||||

|

|||||||

66

.github/dependabot.yml

vendored

@ -1,12 +1,15 @@

|

|||||||

# https://docs.github.com/en/code-security/supply-chain-security/keeping-your-dependencies-updated-automatically/configuration-options-for-dependency-updates#package-ecosystem

|

# Please see the documentation for all configuration options:

|

||||||

|

# https://docs.github.com/github/administering-a-repository/configuration-options-for-dependency-updates

|

||||||

|

|

||||||

version: 2

|

version: 2

|

||||||

|

# Required for uv support for now

|

||||||

|

enable-beta-ecosystems: true

|

||||||

updates:

|

updates:

|

||||||

|

|

||||||

# Enable version updates for npm

|

# Enable version updates for pnpm

|

||||||

- package-ecosystem: "npm"

|

- package-ecosystem: "npm"

|

||||||

target-branch: "dev"

|

target-branch: "dev"

|

||||||

# Look for `package.json` and `lock` files in the `/src-ui` directory

|

# Look for `pnpm-lock.yaml` file in the `/src-ui` directory

|

||||||

directory: "/src-ui"

|

directory: "/src-ui"

|

||||||

open-pull-requests-limit: 10

|

open-pull-requests-limit: 10

|

||||||

schedule:

|

schedule:

|

||||||

@ -34,9 +37,8 @@ updates:

|

|||||||

- "eslint"

|

- "eslint"

|

||||||

|

|

||||||

# Enable version updates for Python

|

# Enable version updates for Python

|

||||||

- package-ecosystem: "pip"

|

- package-ecosystem: "uv"

|

||||||

target-branch: "dev"

|

target-branch: "dev"

|

||||||

# Look for a `Pipfile` in the `root` directory

|

|

||||||

directory: "/"

|

directory: "/"

|

||||||

# Check for updates once a week

|

# Check for updates once a week

|

||||||

schedule:

|

schedule:

|

||||||

@@ -47,14 +49,13 @@ updates:

|

|||||||

# Add reviewers

|

# Add reviewers

|

||||||

reviewers:

|

reviewers:

|

||||||

- "paperless-ngx/backend"

|

- "paperless-ngx/backend"

|

||||||

ignore:

|

|

||||||

- dependency-name: "uvicorn"

|

|

||||||

groups:

|

groups:

|

||||||

development:

|

development:

|

||||||

patterns:

|

patterns:

|

||||||

- "*pytest*"

|

- "*pytest*"

|

||||||

- "ruff"

|

- "ruff"

|

||||||

- "mkdocs-material"

|

- "mkdocs-material"

|

||||||

|

- "pre-commit*"

|

||||||

django:

|

django:

|

||||||

patterns:

|

patterns:

|

||||||

- "*django*"

|

- "*django*"

|

||||||

@@ -65,6 +66,10 @@ updates:

|

|||||||

update-types:

|

update-types:

|

||||||

- "minor"

|

- "minor"

|

||||||

- "patch"

|

- "patch"

|

||||||

|

pre-built:

|

||||||

|

patterns:

|

||||||

|

- psycopg*

|

||||||

|

- zxing-cpp

|

||||||

|

|

||||||

# Enable updates for GitHub Actions

|

# Enable updates for GitHub Actions

|

||||||

- package-ecosystem: "github-actions"

|

- package-ecosystem: "github-actions"

|

||||||

@@ -85,3 +90,50 @@ updates:

|

|||||||

- "major"

|

- "major"

|

||||||

- "minor"

|

- "minor"

|

||||||

- "patch"

|

- "patch"

|

||||||

|

|

||||||

|

# Update Dockerfile in root directory

|

||||||

|

- package-ecosystem: "docker"

|

||||||

|

directory: "/"

|

||||||

|

schedule:

|

||||||

|

interval: "weekly"

|

||||||

|

open-pull-requests-limit: 5

|

||||||

|

reviewers:

|

||||||

|

- "paperless-ngx/ci-cd"

|

||||||

|

labels:

|

||||||

|

- "ci-cd"

|

||||||

|

- "dependencies"

|

||||||

|

commit-message:

|

||||||

|

prefix: "docker"

|

||||||

|

include: "scope"

|

||||||

|

|

||||||

|

# Update Docker Compose files in docker/compose directory

|

||||||

|

- package-ecosystem: "docker-compose"

|

||||||

|

directory: "/docker/compose/"

|

||||||

|

schedule:

|

||||||

|

interval: "weekly"

|

||||||

|

open-pull-requests-limit: 5

|

||||||

|

reviewers:

|

||||||

|

- "paperless-ngx/ci-cd"

|

||||||

|

labels:

|

||||||

|

- "ci-cd"

|

||||||

|

- "dependencies"

|

||||||

|

commit-message:

|

||||||

|

prefix: "docker-compose"

|

||||||

|

include: "scope"

|

||||||

|

groups:

|

||||||

|

# Individual groups for each image

|

||||||

|

gotenberg:

|

||||||

|

patterns:

|

||||||

|

- "docker.io/gotenberg/gotenberg*"

|

||||||

|

tika:

|

||||||

|

patterns:

|

||||||

|

- "docker.io/apache/tika*"

|

||||||

|

redis:

|

||||||

|

patterns:

|

||||||

|

- "docker.io/library/redis*"

|

||||||

|

mariadb:

|

||||||

|

patterns:

|

||||||

|

- "docker.io/library/mariadb*"

|

||||||

|

postgres:

|

||||||

|

patterns:

|

||||||

|

- "docker.io/library/postgres*"

|

||||||

|

|||||||

254

.github/workflows/ci.yml

vendored

@@ -14,9 +14,7 @@ on:

|

|||||||

- 'translations**'

|

- 'translations**'

|

||||||

|

|

||||||

env:

|

env:

|

||||||

# This is the version of pipenv all the steps will use

|

DEFAULT_UV_VERSION: "0.6.x"

|

||||||

# If changing this, change Dockerfile

|

|

||||||

DEFAULT_PIP_ENV_VERSION: "2024.4.0"

|

|

||||||

# This is the default version of Python to use in most steps which aren't specific

|

# This is the default version of Python to use in most steps which aren't specific

|

||||||

DEFAULT_PYTHON_VERSION: "3.11"

|

DEFAULT_PYTHON_VERSION: "3.11"

|

||||||

|

|

||||||

@@ -59,24 +57,25 @@ jobs:

|

|||||||

uses: actions/setup-python@v5

|

uses: actions/setup-python@v5

|

||||||

with:

|

with:

|

||||||

python-version: ${{ env.DEFAULT_PYTHON_VERSION }}

|

python-version: ${{ env.DEFAULT_PYTHON_VERSION }}

|

||||||

cache: "pipenv"

|

|

||||||

cache-dependency-path: 'Pipfile.lock'

|

|

||||||

-

|

-

|

||||||

name: Install pipenv

|

name: Install uv

|

||||||

run: |

|

uses: astral-sh/setup-uv@v5

|

||||||

pip install --user pipenv==${{ env.DEFAULT_PIP_ENV_VERSION }}

|

with:

|

||||||

|

version: ${{ env.DEFAULT_UV_VERSION }}

|

||||||

|

enable-cache: true

|

||||||

|

python-version: ${{ env.DEFAULT_PYTHON_VERSION }}

|

||||||

-

|

-

|

||||||

name: Install dependencies

|

name: Install Python dependencies

|

||||||

run: |

|

run: |

|

||||||

pipenv --python ${{ steps.setup-python.outputs.python-version }} sync --dev

|

uv sync --python ${{ steps.setup-python.outputs.python-version }} --dev --frozen

|

||||||

-

|

|

||||||

name: List installed Python dependencies

|

|

||||||

run: |

|

|

||||||

pipenv --python ${{ steps.setup-python.outputs.python-version }} run pip list

|

|

||||||

-

|

-

|

||||||

name: Make documentation

|

name: Make documentation

|

||||||

run: |

|

run: |

|

||||||

pipenv --python ${{ steps.setup-python.outputs.python-version }} run mkdocs build --config-file ./mkdocs.yml

|

uv run \

|

||||||

|

--python ${{ steps.setup-python.outputs.python-version }} \

|

||||||

|

--dev \

|

||||||

|

--frozen \

|

||||||

|

mkdocs build --config-file ./mkdocs.yml

|

||||||

-

|

-

|

||||||

name: Deploy documentation

|

name: Deploy documentation

|

||||||

if: github.event_name == 'push' && github.ref == 'refs/heads/main'

|

if: github.event_name == 'push' && github.ref == 'refs/heads/main'

|

||||||

@@ -84,7 +83,11 @@ jobs:

|

|||||||

echo "docs.paperless-ngx.com" > "${{ github.workspace }}/docs/CNAME"

|

echo "docs.paperless-ngx.com" > "${{ github.workspace }}/docs/CNAME"

|

||||||

git config --global user.name "${{ github.actor }}"

|

git config --global user.name "${{ github.actor }}"

|

||||||

git config --global user.email "${{ github.actor }}@users.noreply.github.com"

|

git config --global user.email "${{ github.actor }}@users.noreply.github.com"

|

||||||

pipenv --python ${{ steps.setup-python.outputs.python-version }} run mkdocs gh-deploy --force --no-history

|

uv run \

|

||||||

|

--python ${{ steps.setup-python.outputs.python-version }} \

|

||||||

|

--dev \

|

||||||

|

--frozen \

|

||||||

|

mkdocs gh-deploy --force --no-history

|

||||||

-

|

-

|

||||||

name: Upload artifact

|

name: Upload artifact

|

||||||

uses: actions/upload-artifact@v4

|

uses: actions/upload-artifact@v4

|

||||||

@@ -117,12 +120,13 @@ jobs:

|

|||||||

uses: actions/setup-python@v5

|

uses: actions/setup-python@v5

|

||||||

with:

|

with:

|

||||||

python-version: "${{ matrix.python-version }}"

|

python-version: "${{ matrix.python-version }}"

|

||||||

cache: "pipenv"

|

|

||||||

cache-dependency-path: 'Pipfile.lock'

|

|

||||||

-

|

-

|

||||||

name: Install pipenv

|

name: Install uv

|

||||||

run: |

|

uses: astral-sh/setup-uv@v5

|

||||||

pip install --user pipenv==${{ env.DEFAULT_PIP_ENV_VERSION }}

|

with:

|

||||||

|

version: ${{ env.DEFAULT_UV_VERSION }}

|

||||||

|

enable-cache: true

|

||||||

|

python-version: ${{ steps.setup-python.outputs.python-version }}

|

||||||

-

|

-

|

||||||

name: Install system dependencies

|

name: Install system dependencies

|

||||||

run: |

|

run: |

|

||||||

@@ -131,16 +135,18 @@ jobs:

|

|||||||

-

|

-

|

||||||

name: Configure ImageMagick

|

name: Configure ImageMagick

|

||||||

run: |

|

run: |

|

||||||

sudo cp docker/imagemagick-policy.xml /etc/ImageMagick-6/policy.xml

|

sudo cp docker/rootfs/etc/ImageMagick-6/paperless-policy.xml /etc/ImageMagick-6/policy.xml

|

||||||

-

|

-

|

||||||

name: Install Python dependencies

|

name: Install Python dependencies

|

||||||

run: |

|

run: |

|

||||||

pipenv --python ${{ steps.setup-python.outputs.python-version }} run python --version

|

uv sync \

|

||||||

pipenv --python ${{ steps.setup-python.outputs.python-version }} sync --dev

|

--python ${{ steps.setup-python.outputs.python-version }} \

|

||||||

|

--group testing \

|

||||||

|

--frozen

|

||||||

-

|

-

|

||||||

name: List installed Python dependencies

|

name: List installed Python dependencies

|

||||||

run: |

|

run: |

|

||||||

pipenv --python ${{ steps.setup-python.outputs.python-version }} run pip list

|

uv pip list

|

||||||

-

|

-

|

||||||

name: Tests

|

name: Tests

|

||||||

env:

|

env:

|

||||||

@@ -150,17 +156,26 @@ jobs:

|

|||||||

PAPERLESS_MAIL_TEST_USER: ${{ secrets.TEST_MAIL_USER }}

|

PAPERLESS_MAIL_TEST_USER: ${{ secrets.TEST_MAIL_USER }}

|

||||||

PAPERLESS_MAIL_TEST_PASSWD: ${{ secrets.TEST_MAIL_PASSWD }}

|

PAPERLESS_MAIL_TEST_PASSWD: ${{ secrets.TEST_MAIL_PASSWD }}

|

||||||

run: |

|

run: |

|

||||||

cd src/

|

uv run \

|

||||||

pipenv --python ${{ steps.setup-python.outputs.python-version }} run pytest -ra

|

--python ${{ steps.setup-python.outputs.python-version }} \

|

||||||

|

--dev \

|

||||||

|

--frozen \

|

||||||

|

pytest

|

||||||

-

|

-

|

||||||

name: Upload coverage

|

name: Upload backend test results to Codecov

|

||||||

if: ${{ matrix.python-version == env.DEFAULT_PYTHON_VERSION }}

|

if: always()

|

||||||

uses: actions/upload-artifact@v4

|

uses: codecov/test-results-action@v1

|

||||||

with:

|

with:

|

||||||

name: backend-coverage-report

|

token: ${{ secrets.CODECOV_TOKEN }}

|

||||||

path: src/coverage.xml

|

flags: backend-python-${{ matrix.python-version }}

|

||||||

retention-days: 7

|

files: junit.xml

|

||||||

if-no-files-found: warn

|

-

|

||||||

|

name: Upload backend coverage to Codecov

|

||||||

|

uses: codecov/codecov-action@v5

|

||||||

|

with:

|

||||||

|

token: ${{ secrets.CODECOV_TOKEN }}

|

||||||

|

flags: backend-python-${{ matrix.python-version }}

|

||||||

|

files: coverage.xml

|

||||||

-

|

-

|

||||||

name: Stop containers

|

name: Stop containers

|

||||||

if: always()

|

if: always()

|

||||||

@@ -168,42 +183,46 @@ jobs:

|

|||||||

docker compose --file ${{ github.workspace }}/docker/compose/docker-compose.ci-test.yml logs

|

docker compose --file ${{ github.workspace }}/docker/compose/docker-compose.ci-test.yml logs

|

||||||

docker compose --file ${{ github.workspace }}/docker/compose/docker-compose.ci-test.yml down

|

docker compose --file ${{ github.workspace }}/docker/compose/docker-compose.ci-test.yml down

|

||||||

|

|

||||||

install-frontend-depedendencies:

|

install-frontend-dependencies:

|

||||||

name: "Install Frontend Dependencies"

|

name: "Install Frontend Dependencies"

|

||||||

runs-on: ubuntu-24.04

|

runs-on: ubuntu-24.04

|

||||||

needs:

|

needs:

|

||||||

- pre-commit

|

- pre-commit

|

||||||

steps:

|

steps:

|

||||||

- uses: actions/checkout@v4

|

- uses: actions/checkout@v4

|

||||||

|

- name: Install pnpm

|

||||||

|

uses: pnpm/action-setup@v4

|

||||||

|

with:

|

||||||

|

version: 10

|

||||||

-

|

-

|

||||||

name: Use Node.js 20

|

name: Use Node.js 20

|

||||||

uses: actions/setup-node@v4

|

uses: actions/setup-node@v4

|

||||||

with:

|

with:

|

||||||

node-version: 20.x

|

node-version: 20.x

|

||||||

cache: 'npm'

|

cache: 'pnpm'

|

||||||

cache-dependency-path: 'src-ui/package-lock.json'

|

cache-dependency-path: 'src-ui/pnpm-lock.yaml'

|

||||||

- name: Cache frontend dependencies

|

- name: Cache frontend dependencies

|

||||||

id: cache-frontend-deps

|

id: cache-frontend-deps

|

||||||

uses: actions/cache@v4

|

uses: actions/cache@v4

|

||||||

with:

|

with:

|

||||||

path: |

|

path: |

|

||||||

~/.npm

|

~/.pnpm-store

|

||||||

~/.cache

|

~/.cache

|

||||||

key: ${{ runner.os }}-frontenddeps-${{ hashFiles('src-ui/package-lock.json') }}

|

key: ${{ runner.os }}-frontenddeps-${{ hashFiles('src-ui/pnpm-lock.yaml') }}

|

||||||

-

|

-

|

||||||

name: Install dependencies

|

name: Install dependencies

|

||||||

if: steps.cache-frontend-deps.outputs.cache-hit != 'true'

|

if: steps.cache-frontend-deps.outputs.cache-hit != 'true'

|

||||||

run: cd src-ui && npm ci

|

run: cd src-ui && pnpm install

|

||||||

-

|

-

|

||||||

name: Install Playwright

|

name: Install Playwright

|

||||||

if: steps.cache-frontend-deps.outputs.cache-hit != 'true'

|

if: steps.cache-frontend-deps.outputs.cache-hit != 'true'

|

||||||

run: cd src-ui && npx playwright install --with-deps

|

run: cd src-ui && pnpm playwright install --with-deps

|

||||||

|

|

||||||

tests-frontend:

|

tests-frontend:

|

||||||

name: "Frontend Tests (Node ${{ matrix.node-version }} - ${{ matrix.shard-index }}/${{ matrix.shard-count }})"

|

name: "Frontend Tests (Node ${{ matrix.node-version }} - ${{ matrix.shard-index }}/${{ matrix.shard-count }})"

|

||||||

runs-on: ubuntu-24.04

|

runs-on: ubuntu-24.04

|

||||||

needs:

|

needs:

|

||||||

- install-frontend-depedendencies

|

- install-frontend-dependencies

|

||||||

strategy:

|

strategy:

|

||||||

fail-fast: false

|

fail-fast: false

|

||||||

matrix:

|

matrix:

|

||||||

@@ -212,124 +231,88 @@ jobs:

|

|||||||

shard-count: [4]

|

shard-count: [4]

|

||||||

steps:

|

steps:

|

||||||

- uses: actions/checkout@v4

|

- uses: actions/checkout@v4

|

||||||

|

- name: Install pnpm

|

||||||

|

uses: pnpm/action-setup@v4

|

||||||

|

with:

|

||||||

|

version: 10

|

||||||

-

|

-

|

||||||

name: Use Node.js 20

|

name: Use Node.js 20

|

||||||

uses: actions/setup-node@v4

|

uses: actions/setup-node@v4

|

||||||

with:

|

with:

|

||||||

node-version: 20.x

|

node-version: 20.x

|

||||||

cache: 'npm'

|

cache: 'pnpm'

|

||||||

cache-dependency-path: 'src-ui/package-lock.json'

|

cache-dependency-path: 'src-ui/pnpm-lock.yaml'

|

||||||

- name: Cache frontend dependencies

|

- name: Cache frontend dependencies

|

||||||

id: cache-frontend-deps

|

id: cache-frontend-deps

|

||||||

uses: actions/cache@v4

|

uses: actions/cache@v4

|

||||||

with:

|

with:

|

||||||

path: |

|

path: |

|

||||||

~/.npm

|

~/.pnpm-store

|

||||||

~/.cache

|

~/.cache

|

||||||

key: ${{ runner.os }}-frontenddeps-${{ hashFiles('src-ui/package-lock.json') }}

|

key: ${{ runner.os }}-frontenddeps-${{ hashFiles('src-ui/pnpm-lock.yaml') }}

|

||||||

- name: Re-link Angular cli

|

- name: Re-link Angular cli

|

||||||

run: cd src-ui && npm link @angular/cli

|

run: cd src-ui && pnpm link @angular/cli

|

||||||

-

|

-

|

||||||

name: Linting checks

|

name: Linting checks

|

||||||

run: cd src-ui && npm run lint

|

run: cd src-ui && pnpm run lint

|

||||||

-

|

-

|

||||||

name: Run Jest unit tests

|

name: Run Jest unit tests

|

||||||

run: cd src-ui && npm run test -- --max-workers=2 --shard=${{ matrix.shard-index }}/${{ matrix.shard-count }}

|

run: cd src-ui && pnpm run test --max-workers=2 --shard=${{ matrix.shard-index }}/${{ matrix.shard-count }}

|

||||||

-

|

|

||||||

name: Upload Jest coverage

|

|

||||||

if: always()

|

|

||||||

uses: actions/upload-artifact@v4

|

|

||||||

with:

|

|

||||||

name: jest-coverage-report-${{ matrix.shard-index }}

|

|

||||||

path: |

|

|

||||||

src-ui/coverage/coverage-final.json

|

|

||||||

src-ui/coverage/lcov.info

|

|

||||||

src-ui/coverage/clover.xml

|

|

||||||

retention-days: 7

|

|

||||||

if-no-files-found: warn

|

|

||||||

-

|

-

|

||||||

name: Run Playwright e2e tests

|

name: Run Playwright e2e tests

|

||||||

run: cd src-ui && npx playwright test --shard ${{ matrix.shard-index }}/${{ matrix.shard-count }}

|

run: cd src-ui && pnpm exec playwright test --shard ${{ matrix.shard-index }}/${{ matrix.shard-count }}

|

||||||

-

|

-

|

||||||

name: Upload Playwright test results

|

name: Upload frontend test results to Codecov

|

||||||

|

uses: codecov/test-results-action@v1

|

||||||

if: always()

|

if: always()

|

||||||

uses: actions/upload-artifact@v4

|

|

||||||

with:

|

with:

|

||||||

name: playwright-report-${{ matrix.shard-index }}

|

token: ${{ secrets.CODECOV_TOKEN }}

|

||||||

path: src-ui/playwright-report

|

flags: frontend-node-${{ matrix.node-version }}

|

||||||

retention-days: 7

|

directory: src-ui/

|

||||||

|

|

||||||

tests-coverage-upload:

|

|

||||||

name: "Upload to Codecov"

|

|

||||||

runs-on: ubuntu-24.04

|

|

||||||

needs:

|

|

||||||

- tests-backend

|

|

||||||

- tests-frontend

|

|

||||||

steps:

|

|

||||||

-

|

|

||||||

uses: actions/checkout@v4

|

|

||||||

-

|

|

||||||

name: Download frontend jest coverage

|

|

||||||

uses: actions/download-artifact@v4

|

|

||||||

with:

|

|

||||||

path: src-ui/coverage/

|

|

||||||

pattern: jest-coverage-report-*

|

|

||||||

-

|

|

||||||

name: Download frontend playwright coverage

|

|

||||||

uses: actions/download-artifact@v4

|

|

||||||

with:

|

|

||||||

path: src-ui/coverage/

|

|

||||||

pattern: playwright-report-*

|

|

||||||

merge-multiple: true

|

|

||||||

-

|

-

|

||||||

name: Upload frontend coverage to Codecov

|

name: Upload frontend coverage to Codecov

|

||||||

uses: codecov/codecov-action@v5

|

uses: codecov/codecov-action@v5

|

||||||

with:

|

with:

|

||||||

# not required for public repos, but intermittently fails otherwise

|

|

||||||

token: ${{ secrets.CODECOV_TOKEN }}

|

token: ${{ secrets.CODECOV_TOKEN }}

|

||||||

flags: frontend

|

flags: frontend-node-${{ matrix.node-version }}

|

||||||

directory: src-ui/coverage/

|

directory: src-ui/coverage/

|

||||||

# dont include backend coverage files here

|

|

||||||

files: '!coverage.xml'

|

frontend-bundle-analysis:

|

||||||

|

name: "Frontend Bundle Analysis"

|

||||||

|

runs-on: ubuntu-24.04

|

||||||

|

needs:

|

||||||

|

- tests-frontend

|

||||||

|

steps:

|

||||||

|

- uses: actions/checkout@v4

|

||||||

-

|

-

|

||||||

name: Download backend coverage

|

name: Install pnpm

|

||||||

uses: actions/download-artifact@v4

|

uses: pnpm/action-setup@v4

|

||||||

with:

|

with:

|

||||||

name: backend-coverage-report

|

version: 10

|

||||||

path: src/

|

|

||||||

-

|

|

||||||

name: Upload coverage to Codecov

|

|

||||||

uses: codecov/codecov-action@v5

|

|

||||||

with:

|

|

||||||

# not required for public repos, but intermittently fails otherwise

|

|

||||||

token: ${{ secrets.CODECOV_TOKEN }}

|

|

||||||

# future expansion

|

|

||||||

flags: backend

|

|

||||||

directory: src/

|

|

||||||

-

|

-

|

||||||

name: Use Node.js 20

|

name: Use Node.js 20

|

||||||

uses: actions/setup-node@v4

|

uses: actions/setup-node@v4

|

||||||

with:

|

with:

|

||||||

node-version: 20.x

|

node-version: 20.x

|

||||||

cache: 'npm'

|

cache: 'pnpm'

|

||||||

cache-dependency-path: 'src-ui/package-lock.json'

|

cache-dependency-path: 'src-ui/pnpm-lock.yaml'

|

||||||

-

|

-

|

||||||

name: Cache frontend dependencies

|

name: Cache frontend dependencies

|

||||||

id: cache-frontend-deps

|

id: cache-frontend-deps

|

||||||

uses: actions/cache@v4

|

uses: actions/cache@v4

|

||||||

with:

|

with:

|

||||||

path: |

|

path: |

|

||||||

~/.npm

|

~/.pnpm-store

|

||||||

~/.cache

|

~/.cache

|

||||||

key: ${{ runner.os }}-frontenddeps-${{ hashFiles('src-ui/package-lock.json') }}

|

key: ${{ runner.os }}-frontenddeps-${{ hashFiles('src-ui/package-lock.json') }}

|

||||||

-

|

-

|

||||||

name: Re-link Angular cli

|

name: Re-link Angular cli

|

||||||

run: cd src-ui && npm link @angular/cli

|

run: cd src-ui && pnpm link @angular/cli

|

||||||

-

|

-

|

||||||

name: Build frontend and upload analysis

|

name: Build frontend and upload analysis

|

||||||

env:

|

env:

|

||||||

CODECOV_TOKEN: ${{ secrets.CODECOV_TOKEN }}

|

CODECOV_TOKEN: ${{ secrets.CODECOV_TOKEN }}

|

||||||

run: cd src-ui && ng build --configuration=production

|

run: cd src-ui && pnpm run build --configuration=production

|

||||||

|

|

||||||

build-docker-image:

|

build-docker-image:

|

||||||

name: Build Docker image for ${{ github.ref_name }}

|

name: Build Docker image for ${{ github.ref_name }}

|

||||||

@@ -472,16 +455,17 @@ jobs:

|

|||||||

uses: actions/setup-python@v5

|

uses: actions/setup-python@v5

|

||||||

with:

|

with:

|

||||||

python-version: ${{ env.DEFAULT_PYTHON_VERSION }}

|

python-version: ${{ env.DEFAULT_PYTHON_VERSION }}

|

||||||

cache: "pipenv"

|

|

||||||

cache-dependency-path: 'Pipfile.lock'

|

|

||||||

-

|

-

|

||||||

name: Install pipenv + tools

|

name: Install uv

|

||||||

run: |

|

uses: astral-sh/setup-uv@v5

|

||||||

pip install --upgrade --user pipenv==${{ env.DEFAULT_PIP_ENV_VERSION }} setuptools wheel

|

with:

|

||||||

|

version: ${{ env.DEFAULT_UV_VERSION }}

|

||||||

|

enable-cache: true

|

||||||

|

python-version: ${{ steps.setup-python.outputs.python-version }}

|

||||||

-

|

-

|

||||||

name: Install Python dependencies

|

name: Install Python dependencies

|

||||||

run: |

|

run: |

|

||||||

pipenv --python ${{ steps.setup-python.outputs.python-version }} sync --dev

|

uv sync --python ${{ steps.setup-python.outputs.python-version }} --dev --frozen

|

||||||

-

|

-

|

||||||

name: Install system dependencies

|

name: Install system dependencies

|

||||||

run: |

|

run: |

|

||||||

@@ -502,17 +486,21 @@ jobs:

|

|||||||

-

|

-

|

||||||

name: Generate requirements file

|

name: Generate requirements file

|

||||||

run: |

|

run: |

|

||||||

pipenv --python ${{ steps.setup-python.outputs.python-version }} requirements > requirements.txt

|

uv export --quiet --no-dev --all-extras --format requirements-txt --output-file requirements.txt

|

||||||

-

|

-

|

||||||

name: Compile messages

|

name: Compile messages

|

||||||

run: |

|

run: |

|

||||||

cd src/

|

cd src/

|

||||||

pipenv --python ${{ steps.setup-python.outputs.python-version }} run python3 manage.py compilemessages

|

uv run \

|

||||||

|

--python ${{ steps.setup-python.outputs.python-version }} \

|

||||||

|

manage.py compilemessages