Compare commits

407 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

d5876cc97d | ||

|

|

6235edf845 | ||

|

|

9b00a98de3 | ||

|

|

07e18e773a | ||

|

|

fc560b8c04 | ||

|

|

c94f4dcc75 | ||

|

|

9173bca3c7 | ||

|

|

f2cf3a6a0f | ||

|

|

d6346706db | ||

|

|

48738dab9f | ||

|

|

11db87fa11 | ||

|

|

1f7990d742 | ||

|

|

52b32fddc9 | ||

|

|

81a8cb45d7 | ||

|

|

9c583fe9f3 | ||

|

|

4585308e7f | ||

|

|

4386b09eb1 | ||

|

|

f96e7f7895 | ||

|

|

8218b1aa51 | ||

|

|

0559204be4 | ||

|

|

bccac5017c | ||

|

|

3e8038577d | ||

|

|

05b7bcd199 | ||

|

|

3a2a180607 | ||

|

|

9690a00761 | ||

|

|

3532745579 | ||

|

|

24bdc07e14 | ||

|

|

528b572855 | ||

|

|

91ddfaa065 | ||

|

|

ac0cda861e | ||

|

|

a752a4a91a | ||

|

|

7e1d59377a | ||

|

|

7357471b9e | ||

|

|

bd75a65866 | ||

|

|

e65e27d11f | ||

|

|

12488c9634 | ||

|

|

61cd050e24 | ||

|

|

f018e8e54f | ||

|

|

a56a3eb86d | ||

|

|

2fe7df8ca0 | ||

|

|

873c98dddb | ||

|

|

ea287e0db2 | ||

|

|

4babfa1a5b | ||

|

|

aa2fc84d7f | ||

|

|

8d5ae64aff | ||

|

|

82f9dde055 | ||

|

|

c983e73d0f | ||

|

|

20a4a66a57 | ||

|

|

4ed1fff518 | ||

|

|

7223ea3c3f | ||

|

|

676c8f9fa7 | ||

|

|

00fd2268c5 | ||

|

|

3aafabba26 | ||

|

|

b733b32c1d | ||

|

|

4ba9514007 | ||

|

|

4505711e4f | ||

|

|

63c394fa31 | ||

|

|

27c72a7bc6 | ||

|

|

72af13e4e4 | ||

|

|

6c8ef8f044 | ||

|

|

9d4bebd569 | ||

|

|

101b7bb9bf | ||

|

|

52d6cf085d | ||

|

|

39ead59e45 | ||

|

|

015c49030b | ||

|

|

985b9428fe | ||

|

|

ea90bd3f84 | ||

|

|

fccc95254b | ||

|

|

e266e114a9 | ||

|

|

19faed3634 | ||

|

|

fcdcf62c2c | ||

|

|

68251b8be6 | ||

|

|

8e63388833 | ||

|

|

1f2079f65a | ||

|

|

f61fa06993 | ||

|

|

da1d3820ec | ||

|

|

f778d3a6e3 | ||

|

|

96a94c4ee9 | ||

|

|

b126c6b0ff | ||

|

|

1d162dc769 | ||

|

|

a1f257369d | ||

|

|

45e18d7094 | ||

|

|

d6fe17f4c6 | ||

|

|

93bed91937 | ||

|

|

10d22abd8f | ||

|

|

fdb50d0446 | ||

|

|

79bdd829ea | ||

|

|

1c4226d27c | ||

|

|

b437803321 | ||

|

|

b6a266a4f7 | ||

|

|

b7f1561217 | ||

|

|

abbd4d772c | ||

|

|

75ac8d2796 | ||

|

|

41f816a29b | ||

|

|

4a25e9655c | ||

|

|

d0252e8e44 | ||

|

|

73e62600c2 | ||

|

|

5c43041610 | ||

|

|

f56dafe7d9 | ||

|

|

7a1754fffd | ||

|

|

f8c6c07bb7 | ||

|

|

8fefafb844 | ||

|

|

d1a57b5d68 | ||

|

|

3adccc0bdb | ||

|

|

6058630360 | ||

|

|

81d92fb4ad | ||

|

|

40cb0190fc | ||

|

|

e5ebd84eca | ||

|

|

673b4cf911 | ||

|

|

ea1260c2ce | ||

|

|

7040d13f76 | ||

|

|

ed36070e92 | ||

|

|

c88a7646e5 | ||

|

|

d174390624 | ||

|

|

5bb90cb63d | ||

|

|

c4bbb71a3b | ||

|

|

7d6cae96f3 | ||

|

|

b1e616055e | ||

|

|

eec8f09d6f | ||

|

|

c68a6d78eb | ||

|

|

acd3cc5062 | ||

|

|

a55a915439 | ||

|

|

1f68223752 | ||

|

|

d6b626ec50 | ||

|

|

926016f1d8 | ||

|

|

8ea1cc24a7 | ||

|

|

08adb2f540 | ||

|

|

4333ee01bc | ||

|

|

46db0edd5d | ||

|

|

10d017ebe4 | ||

|

|

76a13dadb8 | ||

|

|

72750b9243 | ||

|

|

fba58f3bdd | ||

|

|

6662ca3467 | ||

|

|

6f1ed89e26 | ||

|

|

7e99e42924 | ||

|

|

5d01410dc0 | ||

|

|

9a47dba494 | ||

|

|

6edee78145 | ||

|

|

30eac99a61 | ||

|

|

ea6d040809 | ||

|

|

8e9d5caa37 | ||

|

|

122aa2b9f1 | ||

|

|

fb1da4834c | ||

|

|

96c7222269 | ||

|

|

1737e27b34 | ||

|

|

39f198138a | ||

|

|

c74bb84c83 | ||

|

|

07d06d9aee | ||

|

|

9cef689106 | ||

|

|

11a9c756b3 | ||

|

|

11accaff7f | ||

|

|

77aee832e4 | ||

|

|

4bde14368c | ||

|

|

151d85f2be | ||

|

|

e7c23cfb92 | ||

|

|

3f9ea7b971 | ||

|

|

998c3ef51b | ||

|

|

c85b6b425d | ||

|

|

273916973c | ||

|

|

5009bd022f | ||

|

|

73163d893f | ||

|

|

506af7c9c2 | ||

|

|

c90ed2da1d | ||

|

|

cebb8b9fa2 | ||

|

|

46aca10a72 | ||

|

|

6384c698ad | ||

|

|

abf01be889 | ||

|

|

1e4928d2a0 | ||

|

|

503be90932 | ||

|

|

b5d6a82cc3 | ||

|

|

c073ba5272 | ||

|

|

d1e317ce21 | ||

|

|

d4abeafb34 | ||

|

|

4d96551619 | ||

|

|

178361b247 | ||

|

|

40f8ba23a4 | ||

|

|

bef2d94374 | ||

|

|

f39c7654a0 | ||

|

|

e9fff764cb | ||

|

|

87e466c47c | ||

|

|

bd0b593c4a | ||

|

|

7a8142df2b | ||

|

|

bbe3084eda | ||

|

|

89d42bd078 | ||

|

|

93efaf7a38 | ||

|

|

398575c70c | ||

|

|

4e21fa4830 | ||

|

|

d2d2d9edaf | ||

|

|

771c8bbbe4 | ||

|

|

20eeda19b8 | ||

|

|

96c517d65c | ||

|

|

e70ad3d493 | ||

|

|

516bc48a33 | ||

|

|

7f97716ae9 | ||

|

|

5e40227bc3 | ||

|

|

5479942fc0 | ||

|

|

ce98019b49 | ||

|

|

9470154df2 | ||

|

|

5c59120c57 | ||

|

|

88736ff867 | ||

|

|

fd5b831979 | ||

|

|

3fcd1e2d7e | ||

|

|

2c81648d59 | ||

|

|

cd92c005e3 | ||

|

|

31c8cf020e | ||

|

|

e900a38983 | ||

|

|

7343a07ddd | ||

|

|

e20b4fb905 | ||

|

|

cbbc4d37d0 | ||

|

|

b140935843 | ||

|

|

9faf0a102e | ||

|

|

b747dd58c3 | ||

|

|

09e1b505e1 | ||

|

|

a6babffed8 | ||

|

|

0256e2dfbb | ||

|

|

7afa90b769 | ||

|

|

5796956235 | ||

|

|

3ca215e4dc | ||

|

|

16c4183333 | ||

|

|

6fe37678f2 | ||

|

|

b58188f805 | ||

|

|

f2a42ab6fe | ||

|

|

e236b7bf7b | ||

|

|

35004f434b | ||

|

|

75251ad694 | ||

|

|

870357968a | ||

|

|

a593798b4b | ||

|

|

4f070ba162 | ||

|

|

9517d27f40 | ||

|

|

35bb3dbcc2 | ||

|

|

06117929bb | ||

|

|

d1c8241947 | ||

|

|

4c38b28469 | ||

|

|

ad0f0a0b5d | ||

|

|

83746a9aeb | ||

|

|

6a36a4ec97 | ||

|

|

7e49d047b0 | ||

|

|

68cdeb7b3d | ||

|

|

76293084a4 | ||

|

|

e1cf2117f5 | ||

|

|

7d81de4edf | ||

|

|

37af5992c7 | ||

|

|

af4623e605 | ||

|

|

db8e116681 | ||

|

|

a8616ebfe2 | ||

|

|

a38d3bf7f8 | ||

|

|

1cb5bbd07d | ||

|

|

6edb5b912f | ||

|

|

ec20c7577e | ||

|

|

d6df9b3656 | ||

|

|

80a849fef7 | ||

|

|

bd67b53d50 | ||

|

|

e32ed09da3 | ||

|

|

c5632e5c04 | ||

|

|

4d2b71454d | ||

|

|

5cbb33b02b | ||

|

|

2c55aad6c0 | ||

|

|

1e039dcb32 | ||

|

|

6ca8da4858 | ||

|

|

67b492bcb7 | ||

|

|

360d1e2802 | ||

|

|

1cd76634a3 | ||

|

|

c65c5009e4 | ||

|

|

24fb6cefb9 | ||

|

|

d80e272b75 | ||

|

|

82f05e27c3 | ||

|

|

7a627e4ad8 | ||

|

|

73af9552ec | ||

|

|

e4854f2144 | ||

|

|

6f5c1ac4e1 | ||

|

|

22acc51284 | ||

|

|

a05644fc31 | ||

|

|

d1aa54caa9 | ||

|

|

e293f70a91 | ||

|

|

347986a2b3 | ||

|

|

ede274386b | ||

|

|

3e083354cc | ||

|

|

b2b4f6516a | ||

|

|

2ae702c7bb | ||

|

|

b748420a94 | ||

|

|

8a4546ce0d | ||

|

|

167412a003 | ||

|

|

e8d90b42a1 | ||

|

|

d8c7e9de5f | ||

|

|

2ac1b78a2c | ||

|

|

e8e38befb7 | ||

|

|

b30629dd60 | ||

|

|

f66d7e1c2d | ||

|

|

8417ac7eeb | ||

|

|

6342225b22 | ||

|

|

4460fb7004 | ||

|

|

6f635c74fc | ||

|

|

c82d45689c | ||

|

|

02e0543a02 | ||

|

|

fde0276d65 | ||

|

|

3d6289e4e1 | ||

|

|

5e55b971a8 | ||

|

|

0a43b84a96 | ||

|

|

dc74cc2db5 | ||

|

|

fc00a09318 | ||

|

|

19cf9d0b9a | ||

|

|

f81780cf88 | ||

|

|

c3a55c91dc | ||

|

|

482f02fbaa | ||

|

|

6bf7429ef6 | ||

|

|

4198de604f | ||

|

|

8c06dc2dd1 | ||

|

|

13b4610c1d | ||

|

|

0090128249 | ||

|

|

3153bbd6a8 | ||

|

|

7b1812a9be | ||

|

|

c647daace2 | ||

|

|

70dceb3b37 | ||

|

|

72b1ce5fe6 | ||

|

|

731942d855 | ||

|

|

058dad7ba7 | ||

|

|

fe43e5a717 | ||

|

|

34bab04310 | ||

|

|

18f7c4f31f | ||

|

|

3477b96d87 | ||

|

|

ac850b64aa | ||

|

|

279e421ad5 | ||

|

|

22c8049bed | ||

|

|

3f1392769d | ||

|

|

da71eab0ae | ||

|

|

2e0e6bb8d2 | ||

|

|

1f145c6cba | ||

|

|

c4d48181ee | ||

|

|

0c4ecad4a7 | ||

|

|

d25de5592a | ||

|

|

88fc35d8ea | ||

|

|

02730be871 | ||

|

|

c7876dbbe8 | ||

|

|

85fcb5fedf | ||

|

|

58cbfeb72a | ||

|

|

b2b6cbe9c8 | ||

|

|

4c05a511c2 | ||

|

|

b5bef13b46 | ||

|

|

bb47dc5e06 | ||

|

|

511f154e16 | ||

|

|

10ae2207df | ||

|

|

71df99ffb6 | ||

|

|

5eb26102d4 | ||

|

|

a7fa82a83f | ||

|

|

6ce27d225d | ||

|

|

819a0e1f57 | ||

|

|

702a60b7e7 | ||

|

|

057d5f149f | ||

|

|

d047dafd23 | ||

|

|

b449a7f6e2 | ||

|

|

f302874ae8 | ||

|

|

6af58203dd | ||

|

|

fa4924d5ba | ||

|

|

5b88ebf0e7 | ||

|

|

a0edc7d54d | ||

|

|

b876a0d0df | ||

|

|

27db4f7e51 | ||

|

|

426919fa9f | ||

|

|

e47c152b81 | ||

|

|

b7cb708053 | ||

|

|

7611c2b3d5 | ||

|

|

5f964830aa | ||

|

|

7ec4f906af | ||

|

|

b5f6c06b8b | ||

|

|

55e81ca4bb | ||

|

|

0f7bfc547a | ||

|

|

9525725c28 | ||

|

|

2a2196fa4d | ||

|

|

237efbcaa0 | ||

|

|

351cd06ef7 | ||

|

|

8b37160953 | ||

|

|

db64478d9f | ||

|

|

8bc2dfe4c6 | ||

|

|

3a427c9130 | ||

|

|

01da48693e | ||

|

|

499abd38f6 | ||

|

|

ca2a556259 | ||

|

|

7699a881d0 | ||

|

|

425f87618a | ||

|

|

701ef2f919 | ||

|

|

ce5c5f0837 | ||

|

|

75884285cf | ||

|

|

d7d9c1edc0 | ||

|

|

92db3fec2e | ||

|

|

69ea039e31 | ||

|

|

f640bef5fc | ||

|

|

88f9fb6fc8 | ||

|

|

855e9f6c83 | ||

|

|

e63e9e389e | ||

|

|

c646cd4977 | ||

|

|

6b53c0dc27 | ||

|

|

afb4e317f0 | ||

|

|

a698c69b4d | ||

|

|

4121876116 | ||

|

|

060d3011f7 | ||

|

|

1f0fea2937 | ||

|

|

92e178cc59 | ||

|

|

dc222cefd4 | ||

|

|

0defa9d0ba | ||

|

|

e3edb02090 | ||

|

|

a32625ca04 | ||

|

|

3c08fa9b33 | ||

|

|

e6526d3fd4 | ||

|

|

bee0867a2a | ||

|

|

1711030cb5 | ||

|

|

7b586e6857 |

.gitattributes (vendored, new file, 1 line changed)

@@ -0,0 +1 @@
THANKS.md merge=union

.gitignore (vendored, 2 lines changed)

@@ -42,6 +42,7 @@ htmlcov/
nosetests.xml
coverage.xml
*,cover
.pytest_cache

# Translations
*.mo
@@ -68,6 +69,7 @@ db.sqlite3
.idea

# Other stuff that doesn't belong
.virtualenv
virtualenv
.vagrant
docker-compose.yml

.travis.yml (19 lines changed)

@@ -1,18 +1,25 @@
language: python

before_install:
- sudo apt-get update -qq
- sudo apt-get install -qq libpoppler-cpp-dev unpaper tesseract-ocr tesseract-ocr-eng

sudo: false

matrix:
include:
- python: 3.4
env: TOXENV=py34
- python: 3.5
env: TOXENV=py35
- python: 3.5
env: TOXENV=pep8
- python: 3.6

install:
- pip install --requirement requirements.txt
- pip install tox
- pip install sphinx
script:
- cd src/
- pytest --cov
- pycodestyle
- sphinx-build -b html ../docs ../docs/_build -W

script: tox -c src/tox.ini
after_success:
- coveralls

CODE_OF_CONDUCT.md (new file, 46 lines changed)

@@ -0,0 +1,46 @@
# Contributor Covenant Code of Conduct

## Our Pledge

In the interest of fostering an open and welcoming environment, we as contributors and maintainers pledge to making participation in our project and our community a harassment-free experience for everyone, regardless of age, body size, disability, ethnicity, gender identity and expression, level of experience, nationality, personal appearance, race, religion, or sexual identity and orientation.

## Our Standards

Examples of behavior that contributes to creating a positive environment include:

* Using welcoming and inclusive language
* Being respectful of differing viewpoints and experiences
* Gracefully accepting constructive criticism
* Focusing on what is best for the community
* Showing empathy towards other community members

Examples of unacceptable behavior by participants include:

* Unwelcome sexual attention or advances
* Trolling, insulting/derogatory comments, and personal or political attacks
* Public or private harassment
* Publishing others' private information, such as a physical or electronic address, without explicit permission
* Other conduct which could reasonably be considered inappropriate in a professional setting

## Our Responsibilities

Project maintainers are responsible for clarifying the standards of acceptable behavior and are expected to take appropriate and fair corrective action in response to any instances of unacceptable behavior.

Project maintainers have the right and responsibility to remove, edit, or reject comments, commits, code, wiki edits, issues, and other contributions that are not aligned to this Code of Conduct, or to ban temporarily or permanently any contributor for other behaviors that they deem inappropriate, threatening, offensive, or harmful.

## Scope

This Code of Conduct applies both within project spaces and in public spaces when an individual is representing the project or its community. Examples of representing a project or community include using an official project e-mail address, posting via an official social media account, or acting as an appointed representative at an online or offline event. Representation of a project may be further defined and clarified by project maintainers.

## Enforcement

Instances of abusive, harassing, or otherwise unacceptable behavior may be reported by contacting the project team at code@danielquinn.org. The project team will review and investigate all complaints, and will respond in a way that it deems appropriate to the circumstances. The project team is obligated to maintain confidentiality with regard to the reporter of an incident. Further details of specific enforcement policies may be posted separately.

Project maintainers who do not follow or enforce the Code of Conduct in good faith may face temporary or permanent repercussions as determined by other members of the project's leadership.

## Attribution

This Code of Conduct is adapted from the [Contributor Covenant][homepage], version 1.4, available at [http://contributor-covenant.org/version/1/4][version]

[homepage]: http://contributor-covenant.org
[version]: http://contributor-covenant.org/version/1/4/

Dockerfile (70 lines changed)

@@ -1,46 +1,46 @@
FROM python:3.5
MAINTAINER Pit Kleyersburg <pitkley@googlemail.com>
FROM alpine:3.7

# Install dependencies
RUN apt-get update \
&& apt-get install -y --no-install-recommends \
sudo \
tesseract-ocr tesseract-ocr-eng imagemagick ghostscript unpaper \
&& rm -rf /var/lib/apt/lists/*

# Install python dependencies
RUN mkdir -p /usr/src/paperless
WORKDIR /usr/src/paperless
COPY requirements.txt /usr/src/paperless/
RUN pip install --no-cache-dir -r requirements.txt
LABEL maintainer="The Paperless Project https://github.com/danielquinn/paperless" \
contributors="Guy Addadi <addadi@gmail.com>, Pit Kleyersburg <pitkley@googlemail.com>, \
Sven Fischer <git-dev@linux4tw.de>"

# Copy application
RUN mkdir -p /usr/src/paperless/src
RUN mkdir -p /usr/src/paperless/data
RUN mkdir -p /usr/src/paperless/media
COPY requirements.txt /usr/src/paperless/
COPY src/ /usr/src/paperless/src/
COPY data/ /usr/src/paperless/data/
COPY media/ /usr/src/paperless/media/

# Set consumption directory
ENV PAPERLESS_CONSUMPTION_DIR /consume
RUN mkdir -p $PAPERLESS_CONSUMPTION_DIR

# Migrate database
WORKDIR /usr/src/paperless/src
RUN ./manage.py migrate

# Create user
RUN groupadd -g 1000 paperless \
&& useradd -u 1000 -g 1000 -d /usr/src/paperless paperless \
&& chown -Rh paperless:paperless /usr/src/paperless

# Setup entrypoint
COPY scripts/docker-entrypoint.sh /sbin/docker-entrypoint.sh
RUN chmod 755 /sbin/docker-entrypoint.sh

# Mount volumes
VOLUME ["/usr/src/paperless/data", "/usr/src/paperless/media", "/consume"]
# Set export and consumption directories
ENV PAPERLESS_EXPORT_DIR=/export \
PAPERLESS_CONSUMPTION_DIR=/consume

# Install dependencies
RUN apk --no-cache --update add \
python3 gnupg libmagic bash shadow curl \
sudo poppler tesseract-ocr imagemagick ghostscript unpaper && \
apk --no-cache add --virtual .build-dependencies \
python3-dev poppler-dev gcc g++ musl-dev zlib-dev jpeg-dev && \
# Install python dependencies
python3 -m ensurepip && \
rm -r /usr/lib/python*/ensurepip && \
cd /usr/src/paperless && \
pip3 install --no-cache-dir -r requirements.txt && \
# Remove build dependencies
apk del .build-dependencies && \
# Create the consumption directory
mkdir -p $PAPERLESS_CONSUMPTION_DIR && \
# Create user
addgroup -g 1000 paperless && \
adduser -D -u 1000 -G paperless -h /usr/src/paperless paperless && \
chown -Rh paperless:paperless /usr/src/paperless && \
mkdir -p $PAPERLESS_EXPORT_DIR && \
# Setup entrypoint
chmod 755 /sbin/docker-entrypoint.sh

WORKDIR /usr/src/paperless/src
# Mount volumes and set Entrypoint
VOLUME ["/usr/src/paperless/data", "/usr/src/paperless/media", "/consume", "/export"]
ENTRYPOINT ["/sbin/docker-entrypoint.sh"]
CMD ["--help"]

Pipfile (new file, 37 lines changed)

@@ -0,0 +1,37 @@
[[source]]
url = "https://pypi.python.org/simple"
verify_ssl = true
name = "pypi"

[packages]
django = "<2.0,>=1.11"
pillow = "*"
coveralls = "*"
dateparser = "*"
django-crispy-forms = "*"
django-extensions = "*"
django-filter = "*"
django-flat-responsive = "*"
djangorestframework = "*"
factory-boy = "*"
"flake8" = "*"
filemagic = "*"
fuzzywuzzy = {extras = ["speedup"], version = "==0.15.0"}
gunicorn = "*"
langdetect = "*"
pdftotext = "*"
pyocr = "*"
python-dateutil = "*"
python-dotenv = "*"
python-gnupg = "*"
pytz = "*"
pycodestyle = "*"
pytest = "*"
pytest-cov = "*"
pytest-django = "*"
pytest-sugar = "*"
pytest-env = "*"
pytest-xdist = "*"

[dev-packages]
ipython = "*"

Pipfile.lock (generated, new file, 594 lines changed)

@@ -0,0 +1,594 @@

{

|

||||

"_meta": {

|

||||

"hash": {

|

||||

"sha256": "928fbb4c8952128aef7a2ed2707ce510d31d49df96cfc5f08959698edff6e67f"

|

||||

},

|

||||

"pipfile-spec": 6,

|

||||

"requires": {},

|

||||

"sources": [

|

||||

{

|

||||

"name": "pypi",

|

||||

"url": "https://pypi.python.org/simple",

|

||||

"verify_ssl": true

|

||||

}

|

||||

]

|

||||

},

|

||||

"default": {

|

||||

"apipkg": {

|

||||

"hashes": [

|

||||

"sha256:2e38399dbe842891fe85392601aab8f40a8f4cc5a9053c326de35a1cc0297ac6",

|

||||

"sha256:65d2aa68b28e7d31233bb2ba8eb31cda40e4671f8ac2d6b241e358c9652a74b9"

|

||||

],

|

||||

"version": "==1.4"

|

||||

},

|

||||

"attrs": {

|

||||

"hashes": [

|

||||

"sha256:1c7960ccfd6a005cd9f7ba884e6316b5e430a3f1a6c37c5f87d8b43f83b54ec9",

|

||||

"sha256:a17a9573a6f475c99b551c0e0a812707ddda1ec9653bed04c13841404ed6f450"

|

||||

],

|

||||

"version": "==17.4.0"

|

||||

},

|

||||

"certifi": {

|

||||

"hashes": [

|

||||

"sha256:14131608ad2fd56836d33a71ee60fa1c82bc9d2c8d98b7bdbc631fe1b3cd1296",

|

||||

"sha256:edbc3f203427eef571f79a7692bb160a2b0f7ccaa31953e99bd17e307cf63f7d"

|

||||

],

|

||||

"version": "==2018.1.18"

|

||||

},

|

||||

"chardet": {

|

||||

"hashes": [

|

||||

"sha256:84ab92ed1c4d4f16916e05906b6b75a6c0fb5db821cc65e70cbd64a3e2a5eaae",

|

||||

"sha256:fc323ffcaeaed0e0a02bf4d117757b98aed530d9ed4531e3e15460124c106691"

|

||||

],

|

||||

"version": "==3.0.4"

|

||||

},

|

||||

"coverage": {

|

||||

"hashes": [

|

||||

"sha256:03481e81d558d30d230bc12999e3edffe392d244349a90f4ef9b88425fac74ba",

|

||||

"sha256:0b136648de27201056c1869a6c0d4e23f464750fd9a9ba9750b8336a244429ed",

|

||||

"sha256:104ab3934abaf5be871a583541e8829d6c19ce7bde2923b2751e0d3ca44db60a",

|

||||

"sha256:15b111b6a0f46ee1a485414a52a7ad1d703bdf984e9ed3c288a4414d3871dcbd",

|

||||

"sha256:198626739a79b09fa0a2f06e083ffd12eb55449b5f8bfdbeed1df4910b2ca640",

|

||||

"sha256:1c383d2ef13ade2acc636556fd544dba6e14fa30755f26812f54300e401f98f2",

|

||||

"sha256:28b2191e7283f4f3568962e373b47ef7f0392993bb6660d079c62bd50fe9d162",

|

||||

"sha256:2eb564bbf7816a9d68dd3369a510be3327f1c618d2357fa6b1216994c2e3d508",

|

||||

"sha256:337ded681dd2ef9ca04ef5d93cfc87e52e09db2594c296b4a0a3662cb1b41249",

|

||||

"sha256:3a2184c6d797a125dca8367878d3b9a178b6fdd05fdc2d35d758c3006a1cd694",

|

||||

"sha256:3c79a6f7b95751cdebcd9037e4d06f8d5a9b60e4ed0cd231342aa8ad7124882a",

|

||||

"sha256:3d72c20bd105022d29b14a7d628462ebdc61de2f303322c0212a054352f3b287",

|

||||

"sha256:3eb42bf89a6be7deb64116dd1cc4b08171734d721e7a7e57ad64cc4ef29ed2f1",

|

||||

"sha256:4635a184d0bbe537aa185a34193898eee409332a8ccb27eea36f262566585000",

|

||||

"sha256:56e448f051a201c5ebbaa86a5efd0ca90d327204d8b059ab25ad0f35fbfd79f1",

|

||||

"sha256:5a13ea7911ff5e1796b6d5e4fbbf6952381a611209b736d48e675c2756f3f74e",

|

||||

"sha256:69bf008a06b76619d3c3f3b1983f5145c75a305a0fea513aca094cae5c40a8f5",

|

||||

"sha256:6bc583dc18d5979dc0f6cec26a8603129de0304d5ae1f17e57a12834e7235062",

|

||||

"sha256:701cd6093d63e6b8ad7009d8a92425428bc4d6e7ab8d75efbb665c806c1d79ba",

|

||||

"sha256:7608a3dd5d73cb06c531b8925e0ef8d3de31fed2544a7de6c63960a1e73ea4bc",

|

||||

"sha256:76ecd006d1d8f739430ec50cc872889af1f9c1b6b8f48e29941814b09b0fd3cc",

|

||||

"sha256:7aa36d2b844a3e4a4b356708d79fd2c260281a7390d678a10b91ca595ddc9e99",

|

||||

"sha256:7d3f553904b0c5c016d1dad058a7554c7ac4c91a789fca496e7d8347ad040653",

|

||||

"sha256:7e1fe19bd6dce69d9fd159d8e4a80a8f52101380d5d3a4d374b6d3eae0e5de9c",

|

||||

"sha256:8c3cb8c35ec4d9506979b4cf90ee9918bc2e49f84189d9bf5c36c0c1119c6558",

|

||||

"sha256:9d6dd10d49e01571bf6e147d3b505141ffc093a06756c60b053a859cb2128b1f",

|

||||

"sha256:9e112fcbe0148a6fa4f0a02e8d58e94470fc6cb82a5481618fea901699bf34c4",

|

||||

"sha256:ac4fef68da01116a5c117eba4dd46f2e06847a497de5ed1d64bb99a5fda1ef91",

|

||||

"sha256:b8815995e050764c8610dbc82641807d196927c3dbed207f0a079833ffcf588d",

|

||||

"sha256:be6cfcd8053d13f5f5eeb284aa8a814220c3da1b0078fa859011c7fffd86dab9",

|

||||

"sha256:c1bb572fab8208c400adaf06a8133ac0712179a334c09224fb11393e920abcdd",

|

||||

"sha256:de4418dadaa1c01d497e539210cb6baa015965526ff5afc078c57ca69160108d",

|

||||

"sha256:e05cb4d9aad6233d67e0541caa7e511fa4047ed7750ec2510d466e806e0255d6",

|

||||

"sha256:e4d96c07229f58cb686120f168276e434660e4358cc9cf3b0464210b04913e77",

|

||||

"sha256:f3f501f345f24383c0000395b26b726e46758b71393267aeae0bd36f8b3ade80",

|

||||

"sha256:f8a923a85cb099422ad5a2e345fe877bbc89a8a8b23235824a93488150e45f6e"

|

||||

],

|

||||

"version": "==4.5.1"

|

||||

},

|

||||

"coveralls": {

|

||||

"hashes": [

|

||||

"sha256:32569a43c9dbc13fa8199247580a4ab182ef439f51f65bb7f8316d377a1340e8",

|

||||

"sha256:664794748d2e5673e347ec476159a9d87f43e0d2d44950e98ed0e27b98da8346"

|

||||

],

|

||||

"index": "pypi",

|

||||

"version": "==1.3.0"

|

||||

},

|

||||

"dateparser": {

|

||||

"hashes": [

|

||||

"sha256:940828183c937bcec530753211b70f673c0a9aab831e43273489b310538dff86",

|

||||

"sha256:b452ef8b36cd78ae86a50721794bc674aa3994e19b570f7ba92810f4e0a2ae03"

|

||||

],

|

||||

"index": "pypi",

|

||||

"version": "==0.7.0"

|

||||

},

|

||||

"django": {

|

||||

"hashes": [

|

||||

"sha256:056fe5b9e1f8f7fed9bb392919d64f6b33b3a71cfb0f170a90ee277a6ed32bc2",

|

||||

"sha256:4d398c7b02761e234bbde490aea13ea94cb539ceeb72805b72303f348682f2eb"

|

||||

],

|

||||

"index": "pypi",

|

||||

"version": "==1.11.12"

|

||||

},

|

||||

"django-crispy-forms": {

|

||||

"hashes": [

|

||||

"sha256:5952bab971110d0b86c278132dae0aa095beee8f723e625c3d3fa28888f1675f",

|

||||

"sha256:705ededc554ad8736157c666681165fe22ead2dec0d5446d65fc9dd976a5a876"

|

||||

],

|

||||

"index": "pypi",

|

||||

"version": "==1.7.2"

|

||||

},

|

||||

"django-extensions": {

|

||||

"hashes": [

|

||||

"sha256:37a543af370ee3b0721ff50442d33c357dd083e6ea06c5b94a199283b6f9e361",

|

||||

"sha256:bc9f2946c117bb2f49e5e0633eba783787790ae810ea112fe7fd82fa64de2ff1"

|

||||

],

|

||||

"index": "pypi",

|

||||

"version": "==2.0.6"

|

||||

},

|

||||

"django-filter": {

|

||||

"hashes": [

|

||||

"sha256:ea204242ea83790e1512c9d0d8255002a652a6f4986e93cee664f28955ba0c22",

|

||||

"sha256:ec0ef1ba23ef95b1620f5d481334413700fb33f45cd76d56a63f4b0b1d76976a"

|

||||

],

|

||||

"index": "pypi",

|

||||

"version": "==1.1.0"

|

||||

},

|

||||

"django-flat-responsive": {

|

||||

"hashes": [

|

||||

"sha256:451caa2700c541b52fb7ce2d34d3d8dee9e980cf29f5463bc8a8c6256a1a6474"

|

||||

],

|

||||

"index": "pypi",

|

||||

"version": "==2.0"

|

||||

},

|

||||

"djangorestframework": {

|

||||

"hashes": [

|

||||

"sha256:b6714c3e4b0f8d524f193c91ecf5f5450092c2145439ac2769711f7eba89a9d9",

|

||||

"sha256:c375e4f95a3a64fccac412e36fb42ba36881e52313ec021ef410b40f67cddca4"

|

||||

],

|

||||

"index": "pypi",

|

||||

"version": "==3.8.2"

|

||||

},

|

||||

"docopt": {

|

||||

"hashes": [

|

||||

"sha256:49b3a825280bd66b3aa83585ef59c4a8c82f2c8a522dbe754a8bc8d08c85c491"

|

||||

],

|

||||

"version": "==0.6.2"

|

||||

},

|

||||

"execnet": {

|

||||

"hashes": [

|

||||

"sha256:a7a84d5fa07a089186a329528f127c9d73b9de57f1a1131b82bb5320ee651f6a",

|

||||

"sha256:fc155a6b553c66c838d1a22dba1dc9f5f505c43285a878c6f74a79c024750b83"

|

||||

],

|

||||

"version": "==1.5.0"

|

||||

},

|

||||

"factory-boy": {

|

||||

"hashes": [

|

||||

"sha256:bd5a096d0f102d79b6c78cef1c8c0b650f2e1a3ecba351c735c6d2df8dabd29c",

|

||||

"sha256:be2abc8092294e4097935a29b4e37f5b9ed3e4205e2e32df215c0315b625995e"

|

||||

],

|

||||

"index": "pypi",

|

||||

"version": "==2.10.0"

|

||||

},

|

||||

"faker": {

|

||||

"hashes": [

|

||||

"sha256:226d8fa67a8cf8b4007aab721f67639f130e9cfdc53a7095a2290ebb07a65c71",

|

||||

"sha256:48fed4b4a191e2b42ad20c14115f1c6d36d338b80192075d7573f0f42d7fb321"

|

||||

],

|

||||

"version": "==0.8.13"

|

||||

},

|

||||

"filemagic": {

|

||||

"hashes": [

|

||||

"sha256:e684359ef40820fe406f0ebc5bf8a78f89717bdb7fed688af68082d991d6dbf3"

|

||||

],

|

||||

"index": "pypi",

|

||||

"version": "==1.6"

|

||||

},

|

||||

"flake8": {

|

||||

"hashes": [

|

||||

"sha256:7253265f7abd8b313e3892944044a365e3f4ac3fcdcfb4298f55ee9ddf188ba0",

|

||||

"sha256:c7841163e2b576d435799169b78703ad6ac1bbb0f199994fc05f700b2a90ea37"

|

||||

],

|

||||

"index": "pypi",

|

||||

"version": "==3.5.0"

|

||||

},

|

||||

"fuzzywuzzy": {

|

||||

"hashes": [

|

||||

"sha256:3759bc6859daa0eecef8c82b45404bdac20c23f23136cf4c18b46b426bbc418f",

|

||||

"sha256:5b36957ccf836e700f4468324fa80ba208990385392e217be077d5cd738ae602"

|

||||

],

|

||||

"index": "pypi",

|

||||

"version": "==0.15.0"

|

||||

},

|

||||

"gunicorn": {

|

||||

"hashes": [

|

||||

"sha256:75af03c99389535f218cc596c7de74df4763803f7b63eb09d77e92b3956b36c6",

|

||||

"sha256:eee1169f0ca667be05db3351a0960765620dad53f53434262ff8901b68a1b622"

|

||||

],

|

||||

"index": "pypi",

|

||||

"version": "==19.7.1"

|

||||

},

|

||||

"idna": {

|

||||

"hashes": [

|

||||

"sha256:2c6a5de3089009e3da7c5dde64a141dbc8551d5b7f6cf4ed7c2568d0cc520a8f",

|

||||

"sha256:8c7309c718f94b3a625cb648ace320157ad16ff131ae0af362c9f21b80ef6ec4"

|

||||

],

|

||||

"version": "==2.6"

|

||||

},

|

||||

"langdetect": {

|

||||

"hashes": [

|

||||

"sha256:91a170d5f0ade380db809b3ba67f08e95fe6c6c8641f96d67a51ff7e98a9bf30"

|

||||

],

|

||||

"index": "pypi",

|

||||

"version": "==1.0.7"

|

||||

},

|

||||

"mccabe": {

|

||||

"hashes": [

|

||||

"sha256:ab8a6258860da4b6677da4bd2fe5dc2c659cff31b3ee4f7f5d64e79735b80d42",

|

||||

"sha256:dd8d182285a0fe56bace7f45b5e7d1a6ebcbf524e8f3bd87eb0f125271b8831f"

|

||||

],

|

||||

"version": "==0.6.1"

|

||||

},

|

||||

"more-itertools": {

|

||||

"hashes": [

|

||||

"sha256:0dd8f72eeab0d2c3bd489025bb2f6a1b8342f9b198f6fc37b52d15cfa4531fea",

|

||||

"sha256:11a625025954c20145b37ff6309cd54e39ca94f72f6bb9576d1195db6fa2442e",

|

||||

"sha256:c9ce7eccdcb901a2c75d326ea134e0886abfbea5f93e91cc95de9507c0816c44"

|

||||

],

|

||||

"version": "==4.1.0"

|

||||

},

|

||||

"pdftotext": {

|

||||

"hashes": [

|

||||

"sha256:0b82a9fd255a3f2bf5c861cf9e3174d3c4223e1e441bb060c611dcb4e65c6cb8"

|

||||

],

|

||||

"index": "pypi",

|

||||

"version": "==2.0.2"

|

||||

},

|

||||

"pillow": {

|

||||

"hashes": [

|

||||

"sha256:00633bc2ec40313f4daf351855e506d296ec3c553f21b66720d0f1225ca84c6f",

|

||||

"sha256:03514478db61b034fc5d38b9bf060f994e5916776e93f02e59732a8270069c61",

|

||||

"sha256:040144ba422216aecf7577484865ade90e1a475f867301c48bf9fbd7579efd76",

|

||||

"sha256:16246261ff22368e5e32ad74d5ef40403ab6895171a7fc6d34f6c17cfc0f1943",

|

||||

"sha256:1cb38df69362af35c14d4a50123b63c7ff18ec9a6d4d5da629a6f19d05e16ba8",

|

||||

"sha256:2400e122f7b21d9801798207e424cbe1f716cee7314cd0c8963fdb6fc564b5fb",

|

||||

"sha256:2ee6364b270b56a49e8b8a51488e847ab130adc1220c171bed6818c0d4742455",

|

||||

"sha256:3b4560c3891b05022c464b09121bd507c477505a4e19d703e1027a3a7c68d896",

|

||||

"sha256:41374a6afb3f44794410dab54a0d7175e6209a5a02d407119c81083f1a4c1841",

|

||||

"sha256:438a3faf5f702c8d0f80b9f9f9b8382cfa048ca6a0d64ef71b86b563b0ee0359",

|

||||

"sha256:472a124c640bde4d5468f6991c9fa7e30b723d84ac4195a77c6ab6aea30f2b9c",

|

||||

"sha256:4d32c8e3623a61d6e29ccd024066cd1ba556555abfb4cd714155020e00107e3f",

|

||||

"sha256:4d8077fd649ac40a5c4165f2c22fa2a4ad18c668e271ecb2f9d849d1017a9313",

|

||||

"sha256:62ec7ae98357fcd46002c110bb7cad15fce532776f0cbe7ca1d44c49b837d49d",

|

||||

"sha256:6c7cab6a05351cf61e469937c49dbf3cdf5ffb3eeac71f8d22dc9be3507598d8",

|

||||

"sha256:6eca36905444c4b91fe61f1b9933a47a30480738a1dd26501ff67d94fc2bc112",

|

||||

"sha256:74e2ebfd19c16c28ad43b8a28ff73b904ed382ea4875188838541751986e8c9a",

|

||||

"sha256:7673e7473a13107059377c96c563aa36f73184c29d2926882e0a0210b779a1e7",

|

||||

"sha256:81762cf5fca9a82b53b7b2d0e6b420e0f3b06167b97678c81d00470daa622d58",

|

||||

"sha256:8554bbeb4218d9cfb1917c69e6f2d2ad0be9b18a775d2162547edf992e1f5f1f",

|

||||

"sha256:9b66e968da9c4393f5795285528bc862c7b97b91251f31a08004a3c626d18114",

|

||||

"sha256:a00edb2dec0035e98ac3ec768086f0b06dfabb4ad308592ede364ef573692f55",

|

||||

"sha256:b48401752496757e95304a46213c3155bc911ac884bed2e9b275ce1c1df3e293",

|

||||

"sha256:b6cf18f9e653a8077522bb3aa753a776b117e3e0cc872c25811cfdf1459491c2",

|

||||

"sha256:bb8adab1877e9213385cbb1adc297ed8337e01872c42a30cfaa66ff8c422779c",

|

||||

"sha256:c8a4b39ba380b57a31a4b5449a9d257b1302d8bc4799767e645dcee25725efe1",

|

||||

"sha256:cee9bc75bff455d317b6947081df0824a8f118de2786dc3d74a3503fd631f4ef",

"sha256:d0dc1313dff48af64517cbbd85e046d6b477fbe5e9d69712801f024dcb08c62b",

"sha256:d5bf527ed83617edd1855a5c923eeeaf68bcb9ac0ceb28e3f19b575b3a424984",

"sha256:df5863a21f91de5ecdf7d32a32f406dd9867ebb35d41033b8bd9607a21887599",

"sha256:e39142332541ed2884c257495504858b22c078a5d781059b07aba4c3a80d7551",

"sha256:e52e8f675ba0b2b417fa98579e7286a41a8e23871f17f4793772f5aa884fea79",

"sha256:e6dd55d5d94b9e36929325dd0c9ab85bfde84a5fc35947c334c32af1af668944",

"sha256:e87cc1acbebf263f308a8494272c2d42016aa33c32bf14d209c81e1f65e11868",

"sha256:ea0091cd4100519cedfeea2c659f52291f535ac6725e2368bcf59e874f270efa",

"sha256:eeb247f4f4d962942b3b555530b0c63b77473c7bfe475e51c6b75b7344b49ce3",

"sha256:f0d4433adce6075efd24fc0285135248b0b50f5a58129c7e552030e04fe45c7f",

"sha256:f1f3bd92f8e12dc22884935a73c9f94c4d9bd0d34410c456540713d6b7832b8c",

"sha256:f42a87cbf50e905f49f053c0b1fb86c911c730624022bf44c8857244fc4cdaca",

"sha256:f5f302db65e2e0ae96e26670818157640d3ca83a3054c290eff3631598dcf819",

"sha256:f7634d534662bbb08976db801ba27a112aee23e597eeaf09267b4575341e45bf",

"sha256:fdd374c02e8bb2d6468a85be50ea66e1c4ef9e809974c30d8576728473a6ed03",

"sha256:fe6931db24716a0845bd8c8915bd096b77c2a7043e6fc59ae9ca364fe816f08b"

],

"index": "pypi",

"version": "==5.1.0"

},

"pluggy": {

"hashes": [

"sha256:714306e9b9a7b24ee4c1e3ff6463d7f652cdd30f4693121b31572e2fe1fdaea3",

"sha256:7f8ae7f5bdf75671a718d2daf0a64b7885f74510bcd98b1a0bb420eb9a9d0cff",

"sha256:d345c8fe681115900d6da8d048ba67c25df42973bda370783cd58826442dcd7c",

"sha256:e160a7fcf25762bb60efc7e171d4497ff1d8d2d75a3d0df7a21b76821ecbf5c5"

],

"version": "==0.6.0"

},

"py": {

"hashes": [

"sha256:29c9fab495d7528e80ba1e343b958684f4ace687327e6f789a94bf3d1915f881",

"sha256:983f77f3331356039fdd792e9220b7b8ee1aa6bd2b25f567a963ff1de5a64f6a"

],

"version": "==1.5.3"

},

"pycodestyle": {

"hashes": [

"sha256:1ec08a51c901dfe44921576ed6e4c1f5b7ecbad403f871397feedb5eb8e4fa14",

"sha256:5ff2fbcbab997895ba9ead77e1b38b3ebc2e5c3b8a6194ef918666e4c790a00e",

"sha256:682256a5b318149ca0d2a9185d365d8864a768a28db66a84a2ea946bcc426766",

"sha256:6c4245ade1edfad79c3446fadfc96b0de2759662dc29d07d80a6f27ad1ca6ba9"

],

"index": "pypi",

"version": "==2.3.1"

},

"pyflakes": {

"hashes": [

"sha256:08bd6a50edf8cffa9fa09a463063c425ecaaf10d1eb0335a7e8b1401aef89e6f",

"sha256:8d616a382f243dbf19b54743f280b80198be0bca3a5396f1d2e1fca6223e8805"

],

"version": "==1.6.0"

},

"pyocr": {

"hashes": [

"sha256:9ee8b5f38dd966ca531115fc5fe4715f7fa8961a9f14cd5109c2d938c17a2043"

],

"index": "pypi",

"version": "==0.5.1"

},

"pytest": {

"hashes": [

"sha256:6266f87ab64692112e5477eba395cfedda53b1933ccd29478e671e73b420c19c",

"sha256:fae491d1874f199537fd5872b5e1f0e74a009b979df9d53d1553fd03da1703e1"

],

"index": "pypi",

"version": "==3.5.0"

},

"pytest-cov": {

"hashes": [

"sha256:03aa752cf11db41d281ea1d807d954c4eda35cfa1b21d6971966cc041bbf6e2d",

"sha256:890fe5565400902b0c78b5357004aab1c814115894f4f21370e2433256a3eeec"

],

"index": "pypi",

"version": "==2.5.1"

},

"pytest-django": {

"hashes": [

"sha256:534505e0261cc566279032d9d887f844235342806fd63a6925689670fa1b29d7",

"sha256:7501942093db2250a32a4e36826edfc542347bb9b26c78ed0649cdcfd49e5789"

],

"index": "pypi",

"version": "==3.2.1"

},

"pytest-env": {

"hashes": [

"sha256:7e94956aef7f2764f3c147d216ce066bf6c42948bb9e293169b1b1c880a580c2"

],

"index": "pypi",

"version": "==0.6.2"

},

"pytest-forked": {

"hashes": [

"sha256:e4500cd0509ec4a26535f7d4112a8cc0f17d3a41c29ffd4eab479d2a55b30805",

"sha256:f275cb48a73fc61a6710726348e1da6d68a978f0ec0c54ece5a5fae5977e5a08"

],

"version": "==0.2"

},

"pytest-sugar": {

"hashes": [

"sha256:ab8cc42faf121344a4e9b13f39a51257f26f410e416c52ea11078cdd00d98a2c"

],

"index": "pypi",

"version": "==0.9.1"

},

"pytest-xdist": {

"hashes": [

"sha256:be2662264b035920ba740ed6efb1c816a83c8a22253df7766d129f6a7bfdbd35",

"sha256:e8f5744acc270b3e7d915bdb4d5f471670f049b6fbd163d4cbd52203b075d30f"

],

"index": "pypi",

"version": "==1.22.2"

},

"python-dateutil": {

"hashes": [

"sha256:3220490fb9741e2342e1cf29a503394fdac874bc39568288717ee67047ff29df",

"sha256:9d8074be4c993fbe4947878ce593052f71dac82932a677d49194d8ce9778002e"

],

"index": "pypi",

"version": "==2.7.2"

},

"python-dotenv": {

"hashes": [

"sha256:4965ed170bf51c347a89820e8050655e9c25db3837db6602e906b6d850fad85c",

"sha256:509736185257111613009974e666568a1b031b028b61b500ef1ab4ee780089d5"

],

"index": "pypi",

"version": "==0.8.2"

},

"python-gnupg": {

"hashes": [

"sha256:38f18712b7cfdd0d769bc88a21e90138154b9be2cbffb1e7d28bc37ee73a1c47",

"sha256:5a54a6dd25bf78d3758dd7a1864f4efd122f9ca9402101d90e3ec4483ceafb73"

],

"index": "pypi",

"version": "==0.4.2"

},

"python-levenshtein": {

"hashes": [

"sha256:033a11de5e3d19ea25c9302d11224e1a1898fe5abd23c61c7c360c25195e3eb1"

],

"version": "==0.12.0"

},

"pytz": {

"hashes": [

"sha256:65ae0c8101309c45772196b21b74c46b2e5d11b6275c45d251b150d5da334555",

"sha256:c06425302f2cf668f1bba7a0a03f3c1d34d4ebeef2c72003da308b3947c7f749"

],

"index": "pypi",

"version": "==2018.4"

},

"regex": {

"hashes": [

"sha256:1b428a296531ea1642a7da48562746309c5c06471a97bd0c02dd6a82e9cecee8",

"sha256:27d72bb42dffb32516c28d218bb054ce128afd3e18464f30837166346758af67",

"sha256:32cf4743debee9ea12d3626ee21eae83052763740e04086304e7a74778bf58c9",

"sha256:32f6408dbca35040bc65f9f4ae1444d5546411fde989cb71443a182dd643305e",

"sha256:333687d9a44738c486735955993f83bd22061a416c48f5a5f9e765e90cf1b0c9",

"sha256:35eeccf17af3b017a54d754e160af597036435c58eceae60f1dd1364ae1250c7",

"sha256:361a1fd703a35580a4714ec28d85e29780081a4c399a99bbfb2aee695d72aedb",

"sha256:494bed6396a20d3aa6376bdf2d3fbb1005b8f4339558d8ac7b53256755f80303",

"sha256:5b9c0ddd5b4afa08c9074170a2ea9b34ea296e32aeea522faaaaeeeb2fe0af2e",

"sha256:a50532f61b23d4ab9d216a6214f359dd05c911c1a1ad20986b6738a782926c1a",

"sha256:a9243d7b359b72c681a2c32eaa7ace8d346b7e8ce09d172a683acf6853161d9c",

"sha256:b44624a38d07d3c954c84ad302c29f7930f4bf01443beef5589e9157b14e2a29",

"sha256:be42a601aaaeb7a317f818490a39d153952a97c40c6e9beeb2a1103616405348",

"sha256:eee4d94b1a626490fc8170ffd788883f8c641b576e11ba9b4a29c9f6623371e0",

"sha256:f69d1201a4750f763971ea8364ed95ee888fc128968b39d38883a72a4d005895"

],

"version": "==2018.2.21"

},

"requests": {

"hashes": [

"sha256:6a1b267aa90cac58ac3a765d067950e7dbbf75b1da07e895d1f594193a40a38b",

"sha256:9c443e7324ba5b85070c4a818ade28bfabedf16ea10206da1132edaa6dda237e"

],

"version": "==2.18.4"

},

"six": {

"hashes": [

"sha256:70e8a77beed4562e7f14fe23a786b54f6296e34344c23bc42f07b15018ff98e9",

"sha256:832dc0e10feb1aa2c68dcc57dbb658f1c7e65b9b61af69048abc87a2db00a0eb"

],

"version": "==1.11.0"

},

"termcolor": {

"hashes": [

"sha256:1d6d69ce66211143803fbc56652b41d73b4a400a2891d7bf7a1cdf4c02de613b"

],

"version": "==1.1.0"

},

"text-unidecode": {

"hashes": [

"sha256:5a1375bb2ba7968740508ae38d92e1f889a0832913cb1c447d5e2046061a396d",

"sha256:801e38bd550b943563660a91de8d4b6fa5df60a542be9093f7abf819f86050cc"

],

"version": "==1.2"

},

"tzlocal": {

"hashes": [

"sha256:4ebeb848845ac898da6519b9b31879cf13b6626f7184c496037b818e238f2c4e"

],

"version": "==1.5.1"

},

"urllib3": {

"hashes": [

"sha256:06330f386d6e4b195fbfc736b297f58c5a892e4440e54d294d7004e3a9bbea1b",

"sha256:cc44da8e1145637334317feebd728bd869a35285b93cbb4cca2577da7e62db4f"

],

"version": "==1.22"

}

},

"develop": {

"backcall": {

"hashes": [

"sha256:38ecd85be2c1e78f77fd91700c76e14667dc21e2713b63876c0eb901196e01e4",

"sha256:bbbf4b1e5cd2bdb08f915895b51081c041bac22394fdfcfdfbe9f14b77c08bf2"

],

"version": "==0.1.0"

},

"decorator": {

"hashes": [

"sha256:2c51dff8ef3c447388fe5e4453d24a2bf128d3a4c32af3fabef1f01c6851ab82",

"sha256:c39efa13fbdeb4506c476c9b3babf6a718da943dab7811c206005a4a956c080c"

],

"version": "==4.3.0"

},

"ipython": {

"hashes": [

"sha256:85882f97d75122ff8cdfe129215a408085a26039527110c8d4a2b8a5e45b7639",

"sha256:a6ac981381b3f5f604b37a293369963485200e3639fb0404fa76092383c10c41"

],

"index": "pypi",

"version": "==6.3.1"

},

"ipython-genutils": {

"hashes": [

"sha256:72dd37233799e619666c9f639a9da83c34013a73e8bbc79a7a6348d93c61fab8",

"sha256:eb2e116e75ecef9d4d228fdc66af54269afa26ab4463042e33785b887c628ba8"

],

"version": "==0.2.0"

},

"jedi": {

"hashes": [

"sha256:1972f694c6bc66a2fac8718299e2ab73011d653a6d8059790c3476d2353b99ad",

"sha256:5861f6dc0c16e024cbb0044999f9cf8013b292c05f287df06d3d991a87a4eb89"

],

"version": "==0.12.0"

},

"parso": {

"hashes": [

"sha256:62bd6bf7f04ab5c817704ff513ef175328676471bdef3629d4bdd46626f75551",

"sha256:a75a304d7090d2c67bd298091c14ef9d3d560e3c53de1c239617889f61d1d307"

],

"version": "==0.2.0"

},

"pexpect": {

"hashes": [

"sha256:9783f4644a3ef8528a6f20374eeb434431a650c797ca6d8df0d81e30fffdfa24",

"sha256:9f8eb3277716a01faafaba553d629d3d60a1a624c7cf45daa600d2148c30020c"

],

"markers": "sys_platform != 'win32'",

"version": "==4.5.0"

},

"pickleshare": {

"hashes": [

"sha256:84a9257227dfdd6fe1b4be1319096c20eb85ff1e82c7932f36efccfe1b09737b",

"sha256:c9a2541f25aeabc070f12f452e1f2a8eae2abd51e1cd19e8430402bdf4c1d8b5"

],

"version": "==0.7.4"

},

"prompt-toolkit": {

"hashes": [

"sha256:1df952620eccb399c53ebb359cc7d9a8d3a9538cb34c5a1344bdbeb29fbcc381",

"sha256:3f473ae040ddaa52b52f97f6b4a493cfa9f5920c255a12dc56a7d34397a398a4",

"sha256:858588f1983ca497f1cf4ffde01d978a3ea02b01c8a26a8bbc5cd2e66d816917"

],

"version": "==1.0.15"

},

"ptyprocess": {

"hashes": [

"sha256:e64193f0047ad603b71f202332ab5527c5e52aa7c8b609704fc28c0dc20c4365",

"sha256:e8c43b5eee76b2083a9badde89fd1bbce6c8942d1045146e100b7b5e014f4f1a"

],

"version": "==0.5.2"

},

"pygments": {

"hashes": [

"sha256:78f3f434bcc5d6ee09020f92ba487f95ba50f1e3ef83ae96b9d5ffa1bab25c5d",

"sha256:dbae1046def0efb574852fab9e90209b23f556367b5a320c0bcb871c77c3e8cc"

],

"version": "==2.2.0"

},

"simplegeneric": {

"hashes": [

"sha256:dc972e06094b9af5b855b3df4a646395e43d1c9d0d39ed345b7393560d0b9173"

],

"version": "==0.8.1"

},

"six": {

"hashes": [

"sha256:70e8a77beed4562e7f14fe23a786b54f6296e34344c23bc42f07b15018ff98e9",

"sha256:832dc0e10feb1aa2c68dcc57dbb658f1c7e65b9b61af69048abc87a2db00a0eb"

],

"version": "==1.11.0"

},

"traitlets": {

"hashes": [

"sha256:9c4bd2d267b7153df9152698efb1050a5d84982d3384a37b2c1f7723ba3e7835",

"sha256:c6cb5e6f57c5a9bdaa40fa71ce7b4af30298fbab9ece9815b5d995ab6217c7d9"

],

"version": "==4.3.2"

},

"wcwidth": {

"hashes": [

"sha256:3df37372226d6e63e1b1e1eda15c594bca98a22d33a23832a90998faa96bc65e",

"sha256:f4ebe71925af7b40a864553f761ed559b43544f8f71746c2d756c7fe788ade7c"

],

"version": "==0.1.7"

}

}

}

80

README-el.md

Normal file

@@ -0,0 +1,80 @@

*[English](README.md)*

# Paperless

[](https://paperless.readthedocs.org/) [](https://gitter.im/danielquinn/paperless) [](https://travis-ci.org/danielquinn/paperless) [](https://coveralls.io/github/danielquinn/paperless?branch=master) [](https://github.com/danielquinn/paperless/blob/master/THANKS.md)

Ευρετήριο και αρχείο για όλα σας τα σκαναρισμένα έγγραφα

Μισώ το χαρτί. Πέρα από τα περιβαλλοντικά ζητήματα, είναι ο εφιάλτης ενός τεχνικού.

* Δεν υπάρχει η δυνατότητα της αναζήτησης

* Πιάνουν πολύ χώρο

* Τα αντίγραφα ασφαλείας σημαίνουν περισσότερο χαρτί

Τους τελευταίους μήνες μου έχει τύχει αρκετές φορές να μην μπορώ να βρω το σωστό έγγραφο. Κάποιες φορές ανακύκλωνα το έγγραφο που χρειαζόμουν (ποιος κρατάει τους λογαριασμούς του νερού για 2 χρόνια;) και κάποιες φορές απλά το έχανα... επειδή έτσι είναι τα χαρτιά. Το έκανα αυτό για να κάνω την ζωή μου πιο εύκολη.

## Πώς δουλεύει

Η εφαρμογή Paperless δεν ελέγχει το scanner σας, αλλά σας βοηθάει με τα αποτελέσματα του scanner σας.

1. Αγοράστε ένα scanner με πρόσβαση στο δίκτυο σας. Αν χρειάζεστε έμπνευση, δείτε την σελίδα με τα [προτεινόμενα scanner](https://paperless.readthedocs.io/en/latest/scanners.html).

2. Κάντε την ρύθμιση "scan to FTP" ή κάτι παρόμοιο. Θα μπορεί να αποθηκεύει τις σκαναρισμένες εικόνες σε έναν server χωρίς να χρειάζεται να κάνετε κάτι. Φυσικά, αν το scanner σας δεν μπορεί να αποθηκεύσει κάπου τις εικόνες σας αυτόματα, μπορείτε να το κάνετε χειροκίνητα. Το Paperless δεν ενδιαφέρεται πώς καταλήγουν κάπου τα αρχεία.

3. Να έχετε τον server που τρέχει το OCR script του Paperless να έχει ευρετήριο στην τοπική βάση δεδομένων.

4. Χρησιμοποιήστε το web frontend για να επιλέξετε βάση δεδομένων και να βρείτε αυτό που θέλετε.

5. Κατεβάστε το PDF που θέλετε/χρειάζεστε μέσω του web interface και κάντε ό,τι θέλετε με αυτό. Μπορείτε ακόμη να το εκτυπώσετε και να το στείλετε, σαν να ήταν το αρχικό. Στις περισσότερες περιπτώσεις κανείς δεν θα το προσέξει ή θα νοιαστεί.

Αυτό είναι που θα πάρετε:

## Documentation

Είναι όλα διαθέσιμα εδώ [ReadTheDocs](https://paperless.readthedocs.org/).

## Απαιτήσεις

Όλα αυτά είναι πολύ απλά, και φιλικά προς τον χρήστη, μια συλλογή με πολύτιμα εργαλεία.

* [ImageMagick](http://imagemagick.org/) μετατρέπει τις εικόνες σε έγχρωμες και ασπρόμαυρες.

* [Tesseract](https://github.com/tesseract-ocr) κάνει την αναγνώριση των χαρακτήρων.

* [Unpaper](https://www.flameeyes.eu/projects/unpaper) despeckles and deskews the scanned image.

* [GNU Privacy Guard](https://gnupg.org/) χρησιμοποιείται για κρυπτογράφηση στο backend.

* [Python 3](https://python.org/) είναι η γλώσσα του project.

* [Pillow](https://pypi.python.org/pypi/pillowfight/) Φορτώνει την εικόνα σαν αντικείμενο στην python και μπορεί να χρησιμοποιηθεί με PyOCR

* [PyOCR](https://github.com/jflesch/pyocr) is a slick programmatic wrapper around tesseract.

* [Django](https://www.djangoproject.com/) το framework με το οποίο έγινε το project.

* [Python-GNUPG](http://pythonhosted.org/python-gnupg/) Αποκρυπτογραφεί τα PDF αρχεία στη στιγμή ώστε να κατεβάζετε αποκρυπτογραφημένα αρχεία, αφήνοντας τα κρυπτογραφημένα στον δίσκο.

## Σταθερότητα

Αυτό το project υπάρχει από το 2015 και υπάρχουν αρκετοί άνθρωποι που το χρησιμοποιούν, παρόλα αυτά βρίσκεται σε διαρκή ανάπτυξη (απλά δείτε πότε commit έχουν γίνει στο git history) οπότε μην περιμένετε να είναι 100% σταθερό. Μπορείτε να κάνετε backup την βάση δεδομένων sqlite3, τον φάκελο media και το configuration αρχείο σας ώστε να είστε ασφαλείς.

## Affiliated Projects

Το Paperless υπάρχει εδώ και κάποιο καιρό και άνθρωποι έχουν αρχίσει να φτιάχνουν πράγματα γύρω από αυτό. Αν είσαι ένας από αυτούς τους ανθρώπους, μπορούμε να βάλουμε το project σου σε αυτήν την λίστα:

* [Paperless Desktop](https://github.com/thomasbrueggemann/paperless-desktop): Μια desktop εφαρμογή για εγκατάσταση του Paperless. Τρέχει σε Mac, Linux, και Windows.

* [ansible-role-paperless](https://github.com/ovv/ansible-role-paperless): Ένας εύκολος τρόπος για να τρέχει το Paperless μέσω Ansible.

## Παρόμοια Projects

Υπάρχει ένα άλλο project που λέγεται [Mayan EDMS](https://mayan.readthedocs.org/en/latest/) το οποίο έχει παρόμοια τεχνικά χαρακτηριστικά με το Paperless σε εντυπωσιακό βαθμό. Επίσης βασισμένο στο Django και χρησιμοποιώντας το consumer model με Tesseract και Unpaper, Mayan EDMS έχει *πολλά* περισσότερα χαρακτηριστικά και έρχεται με ένα επιδέξιο UI, αλλά είναι ακόμα σε Python 2. Μπορεί να είναι ότι το Paperless καταναλώνει λιγότερους πόρους, αλλά για να είμαι ειλικρινής, αυτό είναι μια εικασία την οποία δεν έχω επιβεβαιώσει μόνος μου. Ένα πράγμα είναι σίγουρο, το *Paperless* έχει **πολύ** καλύτερο όνομα.

## Σημαντική Σημείωση

Τα scanner για αρχεία συνήθως χρησιμοποιούνται για ευαίσθητα αρχεία. Πράγματα όπως το ΑΜΚΑ, φορολογικά αρχεία, τιμολόγια κτλ. Παρόλο που το Paperless κρυπτογραφεί τα αρχικά αρχεία μέσω του consumption script, το κείμενο OCR *δεν είναι* κρυπτογραφημένο και για αυτό αποθηκεύεται (πρέπει να είναι αναζητήσιμο, οπότε αν κάποιος ξέρει να το κάνει αυτό με κρυπτογραφημένα δεδομένα είμαι όλος αυτιά). Αυτό σημαίνει ότι το Paperless δεν πρέπει ποτέ να τρέχει σε μη αξιόπιστο πάροχο. Για αυτό συστήνω αν θέλετε να το τρέξετε να το τρέξετε σε έναν τοπικό server σπίτι σας.

## Δωρεές

Όπως με όλα τα δωρεάν λογισμικά, η δύναμη δεν βρίσκεται στα οικονομικά αλλά στην συλλογική προσπάθεια. Αλήθεια εκτιμώ κάθε pull request και bug report που προσφέρεται από τους χρήστες του Paperless, οπότε σας παρακαλώ συνεχίστε. Αν παρόλα αυτά, δεν μπορείτε να γράψετε κώδικα/να κάνετε design/να γράψετε documentation, και θέλετε να συνεισφέρετε οικονομικά, δεν θα πω όχι ;-)

Το θέμα είναι ότι είμαι οικονομικά εντάξει, οπότε θα σας ζητήσω να δωρίσετε τα χρήματά σας εδώ [United Nations High Commissioner for Refugees](https://donate.unhcr.org/int-en/general). Κάνουν σημαντική δουλειά και χρειάζονται τα χρήματα πολύ περισσότερο από ό,τι εγώ.

82

README.md

Normal file

@@ -0,0 +1,82 @@

*[Greek](README-el.md)*

# Paperless

[](https://paperless.readthedocs.org/) [](https://gitter.im/danielquinn/paperless) [](https://travis-ci.org/danielquinn/paperless) [](https://coveralls.io/github/danielquinn/paperless?branch=master) [](https://github.com/danielquinn/paperless/blob/master/THANKS.md)

Index and archive all of your scanned paper documents

I hate paper. Environmental issues aside, it's a tech person's nightmare:

* There's no search feature

* It takes up physical space

* Backups mean more paper

In the past few months I've been bitten more than a few times by the problem of not having the right document around. Sometimes I recycled a document I needed (who keeps water bills for two years?) and other times I just lost it... because paper. I wrote this to make my life easier.

## How it Works

Paperless does not control your scanner; it only helps you deal with what your scanner produces.

1. Buy a document scanner that can write to a place on your network. If you need some inspiration, have a look at the [scanner recommendations](https://paperless.readthedocs.io/en/latest/scanners.html) page.

2. Set it up to "scan to FTP" or something similar. It should be able to push scanned images to a server without you having to do anything. Of course, if your scanner doesn't know how to automatically upload the file somewhere, you can always do that manually. Paperless doesn't care how the documents get into its local consumption directory.

3. Have the target server run the Paperless consumption script to OCR the file and index it into a local database.

4. Use the web frontend to sift through the database and find what you want.

5. Download the PDF you need/want via the web interface and do whatever you like with it. You can even print it and send it as if it's the original. In most cases, no one will care or notice.
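
As a rough illustration of steps 3 and 4, here is a minimal standard-library sketch of "pick up whatever lands in the consumption directory, extract its text, index it, then search it". This is not the actual Paperless consumer, which runs Tesseract through PyOCR and stores documents via Django. The function names `consume` and `search`, the `documents` table, and the pluggable `ocr` callable are all assumptions made up for this example.

```python
import sqlite3
from pathlib import Path
from typing import Callable, List


def consume(directory: Path, db: sqlite3.Connection,
            ocr: Callable[[Path], str]) -> int:
    """Index every file in `directory` that is not yet in the database.

    `ocr` is a stand-in for the real character-recognition step: any
    callable that takes a file path and returns its text will do.
    Returns the number of newly indexed files.
    """
    db.execute(
        "CREATE TABLE IF NOT EXISTS documents "
        "(name TEXT PRIMARY KEY, content TEXT)"
    )
    added = 0
    for path in sorted(directory.iterdir()):
        if not path.is_file():
            continue
        already_indexed = db.execute(
            "SELECT 1 FROM documents WHERE name = ?", (path.name,)
        ).fetchone()
        if already_indexed:
            continue
        db.execute(
            "INSERT INTO documents (name, content) VALUES (?, ?)",
            (path.name, ocr(path)),
        )
        added += 1
    db.commit()
    return added


def search(db: sqlite3.Connection, term: str) -> List[str]:
    """Return the names of documents whose extracted text contains `term`."""
    rows = db.execute(
        "SELECT name FROM documents WHERE content LIKE ? ORDER BY name",
        (f"%{term}%",),
    )
    return [name for (name,) in rows]
```

Calling `consume(Path("/some/consumption/dir"), sqlite3.connect("index.db"), my_ocr)` repeatedly is safe in this sketch because files already in the table are skipped. The path, the database filename, and `my_ocr` are placeholders.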

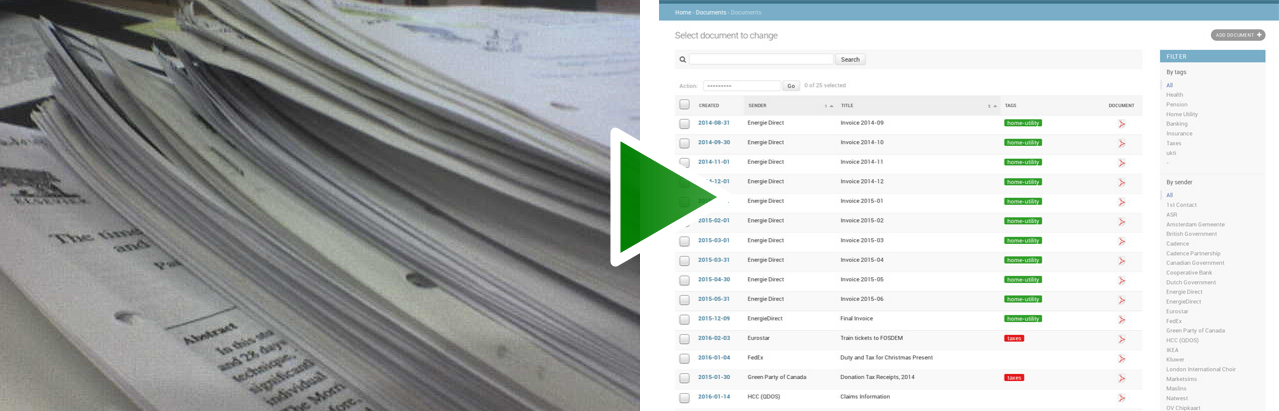

Here's what you get:

## Documentation

It's all available on [ReadTheDocs](https://paperless.readthedocs.org/).

## Requirements

This is really just a simple, shiny, user-friendly wrapper around some very powerful tools.

* [ImageMagick](http://imagemagick.org/) converts the images between colour and greyscale.

* [Tesseract](https://github.com/tesseract-ocr) does the character recognition.

* [Unpaper](https://www.flameeyes.eu/projects/unpaper) despeckles and deskews the scanned image.

* [GNU Privacy Guard](https://gnupg.org/) is used as the encryption backend.

* [Python 3](https://python.org/) is the language of the project.

* [Pillow](https://pypi.python.org/pypi/Pillow/) loads the image data as a Python object to be used with PyOCR.

* [PyOCR](https://github.com/jflesch/pyocr) is a slick programmatic wrapper around Tesseract.

* [Django](https://www.djangoproject.com/) is the framework this project is written against.

* [Python-GNUPG](http://pythonhosted.org/python-gnupg/) decrypts the PDFs on-the-fly to allow you to download unencrypted files, leaving the encrypted ones on-disk.

## Project Status

This project has been around since 2015, and there are lots of people using it. For some reason, it's really popular in Germany -- maybe someone over there can clue me in as to why?

I am no longer doing new development on Paperless, as it does exactly what I need it to, and I have since turned my attention to my latest project, [Aletheia](https://github.com/danielquinn/aletheia). However, I'm not abandoning this project. I am happy to field pull requests and answer questions in the issue queue. If you're a developer yourself and want a new feature, float it in the issue queue and/or send me a pull request! I'm happy to add new stuff, but I just don't have the time to do that work myself.

## Affiliated Projects

Paperless has been around a while now, and people are starting to build stuff on top of it. If you're one of those people, we can add your project to this list:

* [Paperless Desktop](https://github.com/thomasbrueggemann/paperless-desktop): A desktop UI for your Paperless installation. Runs on Mac, Linux, and Windows.
